Public Channels

- # boston-meetup

- # dagster-integration

- # data-council-meetup

- # dev-discuss

- # general

- # github-discussions

- # github-notifications

- # gx-integration

- # jobs

- # london-meetup

- # mark-grover

- # nyc-meetup

- # open-lineage-plus-bacalhau

- # providence-meetup

- # sf-meetup

- # spark-support-multiple-scala-versions

- # spec-compliance

- # toronto-meetup

- # user-generated-metadata

Private Channels

Direct Messages

Group Direct Messages

This is the official start of the OpenLineage initiative. Thank you all for joining. First item is to provide feedback on the doc: https://docs.google.com/document/d/1qL_mkd9lFfe_FMoLTyPIn80-fpvZUAdEIfrabn8bfLE/edit

Thanks all for joining. In addition to the google doc, I have opened a pull request with an initial openapi spec: https://github.com/OpenLineage/OpenLineage/pull/1 The goal is to specify the initial model (just plain lineage) that will be extended with various facets. It does not intend to restrict to HTTP. Those same PUT calls without output can be translated to any async protocol

For reference, the slides of the kickoff meeting: https://docs.google.com/presentation/d/1bOnm4J7y1JRJBJtSImm-3vvXzvqqkL-UsCShAuub5oU/edit?usp=sharing

Am I the only weirdo that would prefer a Google Group mailing list to Slack for communicating?

*Thread Reply:* I think that is better for keeping people engaged, since it isn't just a ton of history to go through

*Thread Reply:* And I think it is also better for having thoughtful design discussions

*Thread Reply:* I’m happy to create a google group if that would help.

*Thread Reply:* Here it is: https://groups.google.com/g/openlineage

*Thread Reply:* Slack is more of a way to nudge discussions along, we can use github issues or the mailing list to discuss specific points

*Thread Reply:* @Ryan Blue and @Wes McKinney any recommendations on automating sending github issues update to that list?

*Thread Reply:* I don't really know how to do that

*Thread Reply:* @Julien Le Dem How about using Github discussions. They are specifically meant to solve this problem. Feature is still in beta, but it be enabled from repository settings. One positive side i see is that it will really easy to follow through and one separate place to go and look for discussions and ideas which are being discussed.

*Thread Reply:* I just enabled it: https://github.com/OpenLineage/OpenLineage/discussions

*Thread Reply:* the plan is to use github issues for discussions on the spec. This is to supplement

@Victor Shafran has joined the channel

👋 Hi everyone!

@Zhamak Dehghani has joined the channel

I’ve opened a github issue to propose OpenAPI as the way to define the lineage metadata: https://github.com/OpenLineage/OpenLineage/issues/2 I have also started a thread on the OpenLineage group: https://groups.google.com/g/openlineage/c/2i7ogPl1IP4 Discussion should happen there: ^

@Evgeny Shulman has joined the channel

FYI I have updated the PR with a simple genrator: https://github.com/OpenLineage/OpenLineage/pull/1

@Daniel Henneberger has joined the channel

Please send me your github ids if you wish to be added to the github repo

@Fabrice Etanchaud has joined the channel

As mentioned on the mailing List, the initial spec is ready for a final review. Thanks for all who gave feedback so far.

*Thread Reply:* https://github.com/OpenLineage/OpenLineage/pull/1

The next step will be to define individual facets

I have opened a PR to update the ReadMe: https://openlineage.slack.com/archives/C01EB6DCLHX/p1607835827000100

👋

I’m planning to merge https://github.com/OpenLineage/OpenLineage/pull/1 soon. That will be the base that we can iterate on and will enable starting the discussion on individual facets

Thank you all for the feedback. I have made an update to the initial spec adressing the final comments

*Thread Reply:* https://github.com/OpenLineage/OpenLineage/pull/1

The contributing guide is available here: https://github.com/OpenLineage/OpenLineage/blob/main/CONTRIBUTING.md Here is an example proposal for adding a new facet: https://github.com/OpenLineage/OpenLineage/issues/9

Welcome to the newly joined members 🙂 👋

Hello! Airflow PMC member here. Super interested in this effort

I'm joining this slack now, but I'm basically done for the year, so will investigate proposals etc next year

Hey all 👋 Super curious what people's thoughts are on the best way for data quality tools i.e. Great Expectations to integrate with OpenLineage. Probably a Dataset level facet of some sort (from the 25 minutes of deep spec knowledge I have 😆), but curious if that's something being worked on? @Abe Gong

*Thread Reply:* The initial OpenLineage spec is pretty explicit about linking metadata primarily to execution of specific tasks, which is appropriate for ValidationResults in Great Expectations

*Thread Reply:* There isn’t as strong a concept of persistent data objects (e.g. a specific table, or batches of data from a specific table)

*Thread Reply:* (In the GE ecosystem, we call these DataAssets and Batches)

*Thread Reply:* This is also an important conceptual unit, since it’s the level of analysis where Expectations and data docs would typically attach.

*Thread Reply:* @James Campbell and I have had some productive conversations with @Julien Le Dem and others about this topic

*Thread Reply:* Yep! The next step will be to open a few github issues with proposals to add to or amend the spec. We would probably start with a Descriptive Dataset facet of a dataset profile (or dataset update profile). There are other aspects to clarify as well as @Abe Gong is explaining above.

Also interesting to see where this would hook into Dagster. Because one of the many great features of Dagster IMO is it let you do stuff like this (without a formal spec albeit). An OpenLineageMaterialization could be interesting

*Thread Reply:* Totally! We had a quick discussion with Dagster. Looking forward to proposals along those lines.

Congrats @Julien Le Dem @Willy Lulciuc and team on launching OpenLineage!

*Thread Reply:* Thanks, @Harikiran Nayak! It’s amazing to see such interest in the community on defining a standard for lineage metadata collection.

*Thread Reply:* Yep! Its a validation that the problem is real!

Hey folks! Worked on a variety of lineage problems across domains. Super excited about this initiative!

*Thread Reply:* What are you current use cases for lineage?

(for review) Proposal issue template: https://github.com/OpenLineage/OpenLineage/pull/11

for people interested, <#C01EB6DCLHX|github-notifications> has the github integration that will notify of new PRs …

👋 Hello! I'm currently working on lineage systems @ Datadog. Super excited to learn more about this effort

*Thread Reply:* Would you mind sharing your main use cases for collecting lineage?

Hi! I’m also working on a similar topic for some time. Really looking forward to having these ideas standardized 🙂

I would be interested to see how to extend this to dashboards/visualizations. If that still falls with the scope of this project.

*Thread Reply:* Definitely, each dashboard should become a node in the lineage graph. That way you can understand all the dependencies of a given dashboard. SOme example of interesting metadata around this: is the dashboard updated in a timely fashion (data freshness); is the data correct (data quality)? Observing changes upstream of the dashboard will provide insights to what’s hapening when freshness or quality suffer

*Thread Reply:* 100%. On a granular scale, the difference between a visualization and dashboard can be interesting. One visualization can be connected to multiple dashboards. But of course this depends on the BI tool, Redash would be an example in this case.

*Thread Reply:* We would need to decide how to model those things. Possibly as a Job type for dashboard and visualization.

*Thread Reply:* It could be. Its interesting in Redash for example you create custom queries that run at certain intervals to produce the data you need to visualize. Pretty much equivalent to job. But you then build certain visualizations off of that “job”. Then you build dashboards off of visualizations. So you could model it as an job or it could make sense for it to be more modeled like an dataset.

Thats the hard part of this. How to you model a visualization/dashboard to all the possible ways they can be created since it differs depending on how the tool you use abstracts away creating an visualization.

👋 Hi everyone!

*Thread Reply:* Part of my role at Netflix is to oversee our data lineage story so very interested in this effort and hope to be able to participate in its success

A reference implementation of the OpenLineage initial spec is in progress in Marquez: https://github.com/MarquezProject/marquez/pull/880

*Thread Reply:* The OpenLineage reference implementation in Marquez will be presented this morning Thursday (01/07) at 10AM PST, at the Marquez Community meeting.

When: Thursday, January 7th at 10AM PST Where: https://us02web.zoom.us/j/89344845719?pwd=Y09RZkxMZHc2U3pOTGZ6SnVMUUVoQT09

*Thread Reply:* Marquez now has a reference implementation of the initial OpenLineage spec

👋 Hi everyone! I'm one of the co-founder at data.world and looking forward to hanging out here

👋 Hi everyone! I was looking for the roadmap and don't see any. Does it exist?

*Thread Reply:* There’s no explicit roadmap so far. With the initial spec defined and the reference implementation implemented, next steps are to define more facets (for example, data shape, dataset size, etc), provide clients to facilitate integrations (java, python, …), implement more integrations (Spark in the works). Members of the community are welcome to drive their own initiatives around the core spec. One of the design goals of the facet is to enable numerous and independant parallel efforts

*Thread Reply:* Is there something you are interested about in particular?

I have opened a proposal to move the spec to JSONSchema, this will make it more focused and decouple from http: https://github.com/OpenLineage/OpenLineage/issues/15

Here is a PR with the corresponding change: https://github.com/OpenLineage/OpenLineage/pull/17

Really excited to see this project! I am curious what's the current state and the roadmap of it?

*Thread Reply:* You can find the initial spec here: https://github.com/OpenLineage/OpenLineage/blob/main/spec/OpenLineage.md The process to contribute to the model is described here: https://github.com/OpenLineage/OpenLineage/blob/main/CONTRIBUTING.md In particular, now we’d want to contribute more facets and integrations. Marquez has a reference implementation: https://github.com/MarquezProject/marquez/pull/880 On the roadmap: • define more facets: data profile, etc • more integrations • java/python client You can see current discussions here: https://github.com/OpenLineage/OpenLineage/issues

For people curious about following github activity you can subscribe to: <#C01EB6DCLHX|github-notifications>

*Thread Reply:* It is not on general, as it can be a bit noisy

Random-ish question: why is producer and schemaURL nested under nominalTime facet in the spec for postRunStateUpdate? It seems like the producer of its metadata isn’t related to the time of the lineage event?

*Thread Reply:* Hi @Zachary Friedman! I replied bellow. https://openlineage.slack.com/archives/C01CK9T7HKR/p1612918909009900

producer and schemaURL are defined in the BaseFacet type and therefore all facets (including nominalTime) have it.

• The producer is an identifier for the code that produced the metadata. The idea is that different facets in the same event can be produced by different libraries. For example In a Spark integration, Iceberg could emit it’s own facet in addition to other facets. The producer identifies what produced what.

• The _schemaURL is the identifier of the version of the schema for a given facet. Similarly an event could contain a mixture of Core facets from the spec as well as custom facets. This makes explicit what the definition for this facet is.

As discussed previously, I have separated a Json Schema spec for the OpenLineage events from the OpenAPI spec defining a HTTP endpoint: https://github.com/OpenLineage/OpenLineage/pull/17

*Thread Reply:* Feel free to comment, this is ready to merge

*Thread Reply:* Thanks, Julien. The new spec format looks great 👍

And the corresponding code generator to start the java (and other languages) client: https://github.com/OpenLineage/OpenLineage/pull/18

those are merged, we now have a jsonschema, an openapi spec that extends it and a generated java model

Following up on a previous discussion: This proposal and the accompanying PR add the notion of InputFacets and OutputFacets: https://github.com/OpenLineage/OpenLineage/issues/20 In summary, we are collecting metadata about jobs and datasets. At the Job level, when it’s fairly static metadata (not changing every run, like the current code version of the job) it goes in a JobFacet. When it is dynamic and changes every run (like the schedule time of the run), it goes in a RunFacet. This proposal is adding the same notion at the Dataset level: when it is static and doesn’t change every run (like the dataset schema) it goes in a Dataset facet. When it is dynamic and changes every run (like the input time interval of the dataset being read, or the statistics of the dataset being written) it goes in an inputFacet or an outputFacet. This enables Job and Dataset versioning logic, to keep track of what changes in the definition of something vs runtime changes

*Thread Reply:* @Kevin Mellott and @Petr Šimeček Thanks for the confirmation on this slack message. To make your comment visible to the wider community, please chime in on the github issue as well: https://github.com/OpenLineage/OpenLineage/issues/20 Thank you.

*Thread Reply:* The PR is out for this: https://github.com/OpenLineage/OpenLineage/pull/23

Hi, I am really interested in this project and Marquez. I am a bit not clear about the differences and relationship between those two projects. As my understanding, OpenLineage provides an api specification for other tools running jobs (e.g. Spark, Airflow) to send out an event to update the run state of the job, then for example Marquez can be the destination for those events and show the data lineage from those run state updates. When you are saying there is an reference implementation of the OpenLineage spec in Marquez, do you mean there is an /lineage endpoint implemented in the Marquez api https://github.com/MarquezProject/marquez/blob/main/api/src/main/java/marquez/api/OpenLineageResource.java? Then my question is what is next step after Marquez has this api? How does Marquez use that endpoint to integrate with airflow for example? I did not find the usage of that endpoint in Marquez project. The library marquez-airflow which integrates Airflow with Marquez seems like only use the other marquez apis to build the data lineage. Or did I misunderstand something? Thank you very much!

*Thread Reply:* Okay, I found the spark integration in Marquez calls the /lineage endpoint. But I am still curious about the future plan to integrate with other tools, like airflow?

*Thread Reply:* Just restating some of my answers from teh marquez slack for the benefits of folks here.

• OpenLineage defines the schema to collect metadata • Marquez has a /lineage endpoint implementing the OpenLineage spec to receive this metadata, implemented by the OpenLineageResource you pointed out • In the future other projects will also have OpenLineage endpoints to receive this metadata • The Marquez Spark integration produces OpenLineage events: https://github.com/MarquezProject/marquez/tree/main/integrations/spark • The Marquez airflow integration still uses the original marquez api but will be migrated to open lineage. • All new integrations will use OpenLineage metadata

Hi Everyone. Just got started with the Marquez REST API and a little bit into the Open Lineage aspects. Very easy to use. Great work on the curl examples for getting started. I'm working with Postman and am happy to share a collection I have once I finish testing. A question about tags --- are there plans for a "post new tag" call in the API? ...or maybe I missed it. Thx. --ernie

*Thread Reply:* I forgot to reply in thread 🙂 https://openlineage.slack.com/archives/C01CK9T7HKR/p1614725462008300

OpenLineage doesn’t have a Tag facet yet (but tags are defined in the Marquez api). Feel free to open a proposal on the github repo. https://github.com/OpenLineage/OpenLineage/issues/new/choose

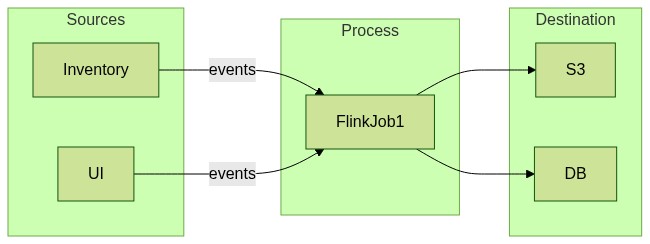

Hey everyone. What's the story for stream processing (like Flink jobs) for OpenLineage?

It does not fit cleanly with runEvent model, which

It is required to issue 1 START event and 1 of [ COMPLETE, ABORT, FAIL ] event per run.

as unbounded stream jobs usually do not complete.

I'd imagine few "workarounds" that work for some cases - for example, imagine a job calculating hourly aggregations of transactions and dumpling them into parquet files for further analysis. The job could issue OTHER event type adding additional output dataset every hour. Another option would be to create new "run" every hour, just indicating the added data.

*Thread Reply:* Ha, I signed up just to ask this precise question!

*Thread Reply:* I’m still looking into the spec myself. Are we required to have 1 or more runs per Job? Or can a Job exist without a run event?

*Thread Reply:* Run event can be emitted when it starts. and it can stay in RUNNING state unless something happens to the job. Additionally, you could send event periodically as state RUNNING to inform the system that job is healthy.

Similar to @Maciej Obuchowski question about Flink / Streaming jobs - what about Streaming sources (eg: a Kafka topic)? It does fit into the dataset model, more or less. But, has anyone used this yet for a set of streaming sources? Particularly with schema changes over time?

Hi @Maciej Obuchowski and @Adam Bellemare, streaming jobs are meant to be covered by the spec but I agree there are a few details to iron out.

In particular, streaming job still have runs. If they run continuously they do not run forever and you want to track that a job has been started at a point in time with a given version of the code, then stopped and started again after being upgraded for example.

I agree with @Maciej Obuchowski that we would also send OTHER events to keep track of progress.

For example one could track checkpointing this way.

For a Kafka topic you could have streaming dataset specific facets or even Kafka specific facets (ex: list of offsets we stopped reading at, schema id, etc )

*Thread Reply:* That's good idea.

Now I'm wondering - let's say we want to track on which offset checkpoint ended processing. That would mean we want to expose checkpoint id, time, and offset. I suppose we don't want to overwrite previous checkpoint info, so we want to have some collection of data in this facet.

Something like appendable facets would be nice, to just add new checkpoint info to the collection, instead of having to push all the checkpoint infos all the time we just want to add new data point.

*Thread Reply:* Thanks Julien! I will try to wrap my head around some use-cases and see how it maps to the current spec. From there, I can see if I can figure out any proposals

*Thread Reply:* You can use the proposal issue template to propose a new facet for example: https://github.com/OpenLineage/OpenLineage/issues/new/choose

Hi everyone, I just hear about OpenLineage and would like to learn more about it. The talks in the repo explain nicely the purpose and general ideas but I have a couple of questions. Are there any working implementations to produce/consume the spec? Also, are there any discussions/guides standard information, naming conventions, etc. in the facets?

• The Spark integration using OpenLineage: https://github.com/MarquezProject/marquez/tree/main/integrations/spark • in particular: ◦ A simple OpenLineage client (we’re working on adding this to the OpenLineage repo): https://github.com/MarquezProject/marquez/tree/b758751b6c0ba6d2f0da1ba7ec636b73317[…]450/integrations/spark/src/main/java/marquez/spark/agent/client ◦ emitting events: ▪︎ https://github.com/MarquezProject/marquez/blob/b758751b6c0ba6d2f0da1ba7ec636b73317[…]ava/marquez/spark/agent/lifecycle/SparkSQLExecutionContext.java ▪︎ https://github.com/MarquezProject/marquez/blob/b758751b6c0ba6d2f0da1ba7ec636b73317[…]ava/marquez/spark/agent/lifecycle/SparkSQLExecutionContext.java • The Marquez OpenLineage endpoint: https://github.com/MarquezProject/marquez/blob/893beddcb7dbc4d4b7b994f003ce461a478[…]bf466/api/src/main/java/marquez/service/OpenLineageService.java

Marquez has a reference implementation of an OpenLineage endpoint. The Spark integration emits OpenLineage events.

Thank you @Julien Le Dem!!! Will take a close look

Q related to People/Teams/Stakeholders/Owners with regards to Jobs and Datasets (didn’t find anything in search):

Let’s say I have a dataset , and there are a number of other downstream jobs that ingest from it. In the case that the dataset is mutated in some way (or deleted, archived, etc), how would I go about notifying the stakeholders of that set about the changes?

Just to be clear, I’m not concerned about the mechanics of doing this, just that there is someone that needs to be notified, who has self-registered on this set.

Similarly, I want to manage the datasets I am concerned about , where I can grab a list of all the datasets I tagged myself on.

This seems to suggest that we could do with additional entities outside of Dataset, Run, Job. However, at the same time, I can see how this can lead to an explosion of other entities. Any thoughts on this particular domain? I think I could achieve something similar with aspects, but this would require that I update the aspect on each entity if I want to wholesale update the user contact, say their email address.

Has anyone else run into something like this? Have you any advice? Or is this something that may be upcoming in the spec?

*Thread Reply:* One thing we were considering is just adding these in as Facets ( Tags as per Marquez), and then plugging into some external people managing system. However, I think the question can be generalized to “should there be some sort of generic entity that can enable relationships between itself and Datasets, Jobs, Runs) as part of an integration element?

*Thread Reply:* That’s a great topic of discussion. I would definitely use the OpenLineage facets to capture what you describe as aspect above. The current Marquez model has a simple notion of ownership at the namespace model but this need to be extended to enable use cases you are describing (owning a dataset or a job) . Right now the owner is just a generic identifier as a string (a user id or a group id for example). Once things are tagged (in some way), you can use the lineage API to find all the downstream or upstream jobs and datasets. In OpenLineage I would start by being able to capture the owner identifier in a facet with contact info optional if it’s available at runtime. It will have the advantage of keeping track of how that changed over time. This definitely deserves its own discussion.

*Thread Reply:* And also to make sure I understand your use case, you want to be able to notify the consumers of a dataset that it is being discontinued/replaced/… ? What else are you thinking about?

*Thread Reply:* Let me pull in my colleagues

*Thread Reply:* 👋 Hi Julien. I’m Olessia, I’m working on the metadata collection implementation with Adam. Some thought on this:

*Thread Reply:* To start off, we’re thinking that there often isn’t a single owner, but rather a set of Stakeholders that evolve over time. So we’d like to be able to attach multiple entries, possibly of different types, to a Dataset. We’re also thinking that a dataset should have at least one owner. So a few things I’d like to confirm/discuss options:

- If I were to stay true to the spec as it’s defined atm I wouldn’t be able to add a required facet. True/false?

- According to the readme, “...emiting a new facet with the same name for the same entity replaces the previous facet instance for that entity entirely”. If we were to store multiple stakeholders, we’d have a field “stakeholders” and its value would be a list? This would make queries involving stakeholders not very straightforward. If the facet is overwritten every time, how do I a) add individuals to the list b) track changes to the list over time. Let me know what I’m missing, because based on what you said above tracking facet changes over time is possible.

- Run events are issued by a scheduler. Why should it be in the domain of the scheduler to know the entire list of Stakeholders?

- I noticed that Marquez has separate endpoints to capture information about Datasets, and some additional information beyond what’s described in the spec is required. In this context, we could add a required Stakeholder facets on a Dataset, and potentially even additional end points to add and remove Stakeholders. Is that a valid way to go about this, in your opinion?

Curious to hear your thoughts on all of this!

*Thread Reply:* > To start off, we’re thinking that there often isn’t a single owner, but rather a set of Stakeholders that evolve over time. So we’d like to be able to attach multiple entries, possibly of different types, to a Dataset. We’re also thinking > that a dataset should have at least one owner. So a few things I’d like to confirm/discuss options: > -> If I were to stay true to the spec as it’s defined atm I wouldn’t be able to add a required facet. True/false? Correct, The spec defines what facets looks like (and how you can make your own custom facets) but it does not make statements about whether facets are required. However, you can have your own validation and make certain things required if you wish to on the client side? > - According to the readme, “...emiting a new facet with the same name for the same entity replaces the previous facet instance for that entity entirely”. If we were to store multiple stakeholders, we’d have a field “stakeholders” and its value would be a list? Yes, I would indeed consider such a facet on the dataset with the stakeholder.

> This would make queries involving stakeholders not very straightforward. If the facet is overwritten every time, how do I > a) add individuals to the list You would provide the new list of stake holders. OpenLineage standardizes lineage collection and defines a format for expressing metadata. Marquez will keep track of how metadata has evolved over time.

> b) track changes to the list over time. Let me know what I’m missing, because based on what you said above tracking facet changes over time is possible. Each event is an observation at a point in time. In a sense they are each immutable. There’s a “current” version but also all the previous ones stored in Marquez. Marquez stores each version of a dataset it received through OpenLineage and exposes an API to see how that evolved over time.

> - Run events are issued by a scheduler. Why should it be in the domain of the scheduler to know the entire list of Stakeholders? The scheduler emits the information that it knows about. For example: “I started this job and it’s reading from this dataset and is writing to this other dataset.” It may or may not be in the domain of the scheduler to know the list of stakeholders. If not then you could emit different types of events to add a stakeholder facet to a dataset. We may want to refine the spec for that. Actually I would be curious to hear what you think should be the source of truth for stakeholders. It is not the intent to force everything coming from the scheduler.

- example 1: stakeholders are people on call for the job, they are defined as part of the job and that also enables alerting

- example 2: stakeholders are consumers of the jobs: they may be defined somewhere else

> - I noticed that Marquez has separate endpoints to capture information about Datasets, and some additional information beyond what’s described in the spec is required. In this context, we could add a required Stakeholder facets on a Dataset, and potentially even additional end points to add and remove Stakeholders. Is that a valid way to go about this, in your opinion?

*Thread Reply:* Marquez existed before OpenLineage. In particular the /run end-point to create and update runs will be deprecated as the OpenLineage /lineage endpoint replaces it. At the moment we are mapping OpenLineage metadata to Marquez. Soon Marquez will have all the facets exposed in the Marquez API. (See: https://github.com/MarquezProject/marquez/pull/894/files) We could make Marquez Configurable or Pluggable for validation purposes. There is already a notion of LineageListener for example. Although Marquez collects the metadata. I feel like this validation would be better upstream or with some some other mechanism. The question is when do you create a dataset vs when do you become a stakeholder? What are the various stakeholder and what is the responsibility of the minimum one stakeholder? I would probably make it required to deploy the job that the stakeholder is defined. This would apply to the output dataset and would be collected in Marquez.

In general, you are very welcome to make suggestion on additional endpoints for Marquez and I’m happy to discuss this further as those ideas are progressing.

> Curious to hear your thoughts on all of this! Thanks for taking the time!

*Thread Reply:* https://openlineage.slack.com/archives/C01CK9T7HKR/p1621887895004200

Thanks for the Python client submission @Maciej Obuchowski https://github.com/OpenLineage/OpenLineage/pull/34

I also have added a spec to define a standard naming policy. Please review: https://github.com/OpenLineage/OpenLineage/pull/31/files

We now have a python client! Thanks @Maciej Obuchowski

Question, what do you folks see as the canonical mechanism for receiving OpenLineage events? Do you see an agent like statsd? Or do you see this as purely an API spec that services could implement? Do you see producers of lineage data writing code to send formatted OpenLineage payloads to arbitrary servers that implement receipt of these events? Curious what the long-term vision is here related to how an ecosystem of producers and consumers of payloads would interact?

*Thread Reply:* Marquez is the reference implementation for receiving events and tracking changes. But the definition of the API let’s other receive them (and also enables using openlineage events to sync between systems)

*Thread Reply:* In particular, Egeria is involved in enabling receiving and emitting openlineage

*Thread Reply:* Thanks @Julien Le Dem. So to get specific, if dbt were to emit OpenLineage events, how would this work? Would dbt Cloud hypothetically allow users to configure an endpoint to send OpenLineage events to, similar in UI implementation to configuring a Stripe webhook perhaps? And then whatever server the user would input here would point to somewhere that implements receipt of OpenLineage payloads? This is all a very hypothetical example, but trying to ground it in something I have a solid mental model for.

*Thread Reply:* hypothetically speaking, that all sounds right. so a user, who, e.g., has a dbt pipeline and an AWS glue pipeline could configure both of those projects to point to the same open lineage service and get their entire lineage graph even if the two pipelines aren't connected.

*Thread Reply:* Yeah, OpenLineage events need to be published to a backend (can be Kafka, can be a graphDB, etc). Your Stripe webhook analogy is aligned with how events can be received. For example, in Marquez, we expose a /lineage endpoint that consumes OpenLineage events. We then map an OpenLineage event to the Marquez model (sources, datasets, jobs, runs) that’s persisted in postgres.

*Thread Reply:* sorry, I was away last week. Yes that sounds right.

Hi everyone, I just started discovering OpenLineage and Marquez, it looks great and the quick-start tutorial is very helpful! One question though, I pushed some metadata to Marquez using the Lineage POST endpoint, and when I try to confirm that everything was created using Marquez REST API, everything is there ... but I don't see these new objects in the Marquez UI... what is the best way how to investigate where the issue is?

*Thread Reply:* Welcome, @Jakub Moravec (IBM/Manta) 👋 . Given that you're able to retrieve metadata using the marquezAPI, you should be able to also view dataset and job metadata in the UI. Mind using the search bar in the top right-hand corner in the UI to see if your metadata is searchable? The UI only renders jobs and datasets that are connected in the lineage graph. We're working towards a more general metadata exploration experience, but currently the lineage graph is the main experience.

Hi friends, we're exploring OpenLineage and while building out integration for existing systems we realized there is no obvious way for an input to specify what "version" of that dataset is being consumed. For example, we have a job that rolls up a variable number of what OpenLineage calls dataset versions. By specifying only that dataset, we can't represent the specific instances of it that are actually rolled up. We think that would be a very important part of the lineage graph.

Are there any thoughts on how to address specific dataset versions? Is this where custom input facets would come to play?

Furthermore, based on the spec, it appears that events can provide dataset facets for both inputs and outputs and this seems to open the door to race conditions in which two runs concurrently create dataset versions of a dataset. Is this where the eventTime field is supposed to be used?

*Thread Reply:* Your intuition is right here. I think we should define an input facet that specifies which dataset version is being read. Similarly you would have an output facet that specifies what version is being produced. This would apply to storage layers like Deltalake and Iceberg as well.

*Thread Reply:* Regarding the race condition, input and output facets are attached to the run. The version of the dataset that was read is an attribute of a run and should not modify the dataset itself.

*Thread Reply:* See the Dataset description here: https://github.com/OpenLineage/OpenLineage/blob/main/spec/OpenLineage.md#core-lineage-model

Hi everyone! I’m exploring what existing, open-source integrations are available, specifically for Spark, Airflow, and Trino (PrestoSQL). My team is looking both to use and contribute to these integrations. I’m aware of the integration in the Marquez repo: • Spark: https://github.com/MarquezProject/marquez/tree/main/integrations/spark • Airflow: https://github.com/MarquezProject/marquez/tree/main/integrations/airflow Are there other efforts I should be aware of, whether for these two or for Trino? Thanks for any information!

*Thread Reply:* I think for Trino integration you'd be looking at writing a Trino extractor if I'm not mistaken, yes?

*Thread Reply:* But extractor would obviously be at the Marquez layer not OpenLineage

*Thread Reply:* And hopefully the metadata you'd be looking to extract from Trino wouldn't have any connector-specific syntax restrictions.

Hey all! Right now I am working on getting OpenLineage integrated with some microservices here at Northwestern Mutual and was looking for some advice. The current service I am trying to integrate it with moves files from one AWS S3 bucket to another so i was hoping to track that movement with OpenLineage. However by my understanding the inputs that would be passed along in a runEvent are meant to be datasets that have schema and other properties. But I wanted to have that input represent the file being moved. Is this a proper usage of Open Lineage? Or is this a use case that is still being developed? Any and all help is appreciated!

*Thread Reply:* This is a proper usage. That schema is optional if it’s not available.

*Thread Reply:* You would model it as a job reading from a folder (the input dataset) in the input bucket and writing to a folder (the output dataset) in the output bucket

*Thread Reply:* This is similar to how this is modeled in the spark integration (spark job reading and writing to s3 buckets)

*Thread Reply:* for reference: getting the urls for the inputs: https://github.com/MarquezProject/marquez/blob/c5e5d7b8345e347164aa5aa173e8cf35062[…]marquez/spark/agent/lifecycle/plan/HadoopFsRelationVisitor.java

*Thread Reply:* getting the output URL: https://github.com/MarquezProject/marquez/blob/c5e5d7b8345e347164aa5aa173e8cf35062[…]ark/agent/lifecycle/plan/InsertIntoHadoopFsRelationVisitor.java

*Thread Reply:* See the spec (comments welcome) for the naming of S3 datasets: https://github.com/OpenLineage/OpenLineage/pull/31/files#diff-e3a8184544e9bc70d8a12e76b58b109051c182a914f0b28529680e6ced0e2a1cR87

*Thread Reply:* Hey Julien, thank you so much for getting back to me. I'll take a look at the documentation/implementations you've sent me and will reach out if I have anymore questions. Thanks again!

*Thread Reply:* @Julien Le Dem I left a quick comment on that spec PR you mentioned. Just wanted to let you know.

Hello all. I was reading through the OpenLineage documentation on GitHub and noticed a very minor typo (an instance where and should have been an). I was just about to create a PR for it but wanted to check with someone to see if that would be something that the team is interested in.

Thanks for the tool, I'm looking forward to learning more about it.

*Thread Reply:* Thank you! Please do fix typos, I’ll approve your PR.

*Thread Reply:* No problem. Here's the PR. https://github.com/OpenLineage/OpenLineage/pull/47

*Thread Reply:* Once I fixed the ones I saw I figured "Why not just run it through a spell checker just in case... " and found a few additional ones.

For your enjoyment, @Julien Le Dem was on the Data Engineering Podcast talking about OpenLineage!

https://www.dataengineeringpodcast.com/openlineage-data-lineage-specification-episode-187/

Also happened yesterday: OpenLineage being accepted by the LFAI&Data.

I have created a channel to discuss <#C022MMLU31B|user-generated-metadata> since this came up in a few discussions.

hey guys, does anyone have any sample openlineage schemas for S3 please? potentially including facets for attributes in a parquet file? that would help heaps thanks. i am trying to slowly bring in a common metadata interface and this will help shape some of the conversations 🙂 with a move to marquez/datahub et al over time

*Thread Reply:* We currently don’t have S3 (or distributed filesystem specific facets) at the moment, but such support would be a great addition! @Julien Le Dem would be best to answer if any work has been done in this area 🙂

*Thread Reply:* Also, happy to answer any Marquez specific questions, @Jonathon Mitchal when you’re thinking of making the move. Marquez supports OpenLineage out of the box 🙌

*Thread Reply:* @Jonathon Mitchal You can follow the naming strategy here for referring to a S3 dataset: https://github.com/OpenLineage/OpenLineage/blob/main/spec/Naming.md#s3

*Thread Reply:* There is no facet yet for the attributes of a Parquet file. I can give you feedback if you want to start defining one. https://github.com/OpenLineage/OpenLineage/blob/main/CONTRIBUTING.md#proposing-changes

*Thread Reply:* Adding Parquet metadata as a facet would make a lot of sense. It is mainly a matter of specifying what the json would look like

*Thread Reply:* for reference the parquet metadata is defined here: https://github.com/apache/parquet-format/blob/master/src/main/thrift/parquet.thrift

*Thread Reply:* Thats awesome, thanks for the guidance Willy and Julien ... will report back on how we get on

hi all! just wanted to introduce myself, I'm the Head of Data at Hightouch.io, we build reverse etl pipelines from the warehouse into various destinations. I've been following OpenLineage for a while now and thought it would be nice to build and expose our runs via the standard and potentially save that back to the warehouse for analysis/alerting. Really interesting concept, looking forward to playing around with it

*Thread Reply:* Welcome! Let use know if you have any questions

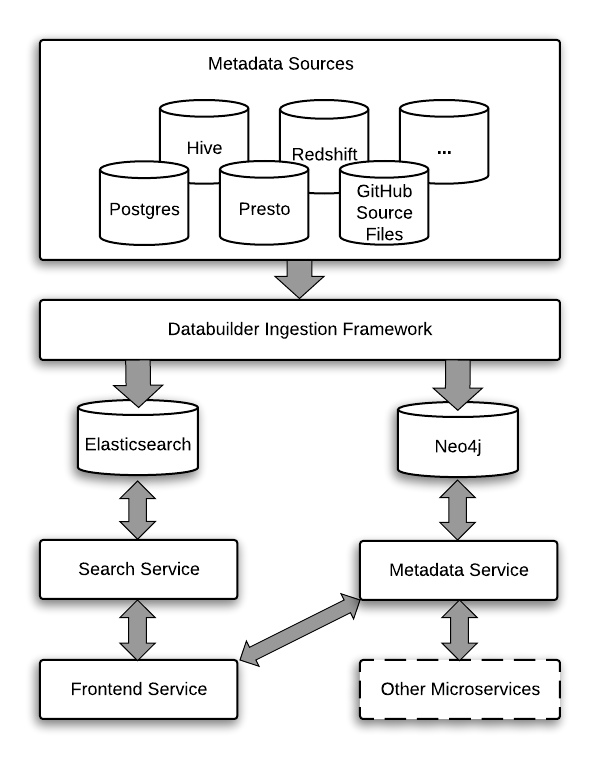

Hi all! I have a noob question. As I understand it, one of the main purposes of OpenLineage is to avoid runaway proliferation of bespoke connectors for each data lineage/cataloging/provenance tool to each data source/job scheduler/query engine etc. as illustrated in the problem diagram from the main repo below.

My understanding is that instead, things push to OpenLineage which provides pollable endpoints for metadata tools.

I’m looking at Amundsen, and it seems to have bespoke connectors, but these are pull-based - I don’t need to instrument my data resources to push to Amundsen, I just need to configure Amundsen to poll my data resources (e.g. the Postgres metadata extractor here).

Can OpenLineage do something similar where I can just point it at something to extract metadata from it, rather than instrumenting that thing to push metadata to OpenLineage? If not, I’m wondering why?

Is it the case that Open Lineage defines the general framework but doesn’t actually enforce push or pull-based implementations, it just so happens that the reference implementation (Marquez) uses push?

*Thread Reply:* > Is it the case that Open Lineage defines the general framework but doesn’t actually enforce push or pull-based implementations, it just so happens that the reference implementation (Marquez) uses push? Yes, at core OpenLineage just enforces format of the event. We also aim to provide clients - REST, later Kafka, etc. and some reference implementations - which are now in Marquez repo. https://raw.githubusercontent.com/OpenLineage/OpenLineage/main/doc/Scope.png

There are several differences between push and poll models. Most important one is that with push model, latency between your job and emitting OpenLineage events is very low. With some systems, with internal, push based model you have more runtime metadata available than when looking from outside. Another one would be that naive poll implementation would need to "rebuild the world" on each change. There are also disadvantages, such as that usually, it's easier to write plugin that extracts data from outside the system than hooking up to the internals.

Integration with Amundsen specifically is planned. Although, right now it seems to me that way to do it is to bypass the databuilder framework and push directly to underlying database, such as Neo4j, or make Marquez backend for Metadata Service: https://raw.githubusercontent.com/amundsen-io/amundsen/master/docs/img/Amundsen_Architecture.png

*Thread Reply:* This is really helpful, thank you @Maciej Obuchowski!

*Thread Reply:* Similar to what you say about push vs pull, I found DataHub’s comment to be interesting yesterday: > Push is better than pull: While pulling metadata directly from the source seems like the most straightforward way to gather metadata, developing and maintaining a centralized fleet of domain-specific crawlers quickly becomes a nightmare. It is more scalable to have individual metadata providers push the information to the central repository via APIs or messages. This push-based approach also ensures a more timely reflection of new and updated metadata.

*Thread Reply:* yes. You can also “pull-to-push” for things that don’t push.

*Thread Reply:* @Maciej Obuchowski any particular reason for bypassing databuilder and go directly to neo4j? By design databuilder is supposed to be very abstract so any kind of backend can be used with Amundsen. Currently there are at least 4 and neo4j is just one of them.

*Thread Reply:* Databuilder's pull model is very different than OpenLineage's push model, where the events are generated while the dataset itself is generated.

So, how would you see using it? Just to proxy the events to concrete search and metadata backend?

I'm definitely not an Amundsen expert, so feel free to correct me if I'm getting it wrong.

*Thread Reply:* @Mariusz Górski my slide that Maciej is referring to might be a bit misleading. The Amundsen integration does not exist yet. Please add your input in the ticket: https://github.com/OpenLineage/OpenLineage/issues/86

*Thread Reply:* thanks Julien! will take a look

@here Hello, My name is Kedar Rajwade. I happened to come across the OpenLineage project and it looks quite interesting. Is there some kind of getting start guide that I can follow. Also are there any weekly/bi-weekly calls that I can attend to know the current/future plans ?

*Thread Reply:* Welcome! You can look here: https://github.com/OpenLineage/OpenLineage/blob/main/CONTRIBUTING.md

*Thread Reply:* We’re starting a monthly call, I will publish more details here

*Thread Reply:* Do you have a specific use case in mind?

The first instance of the OpenLineage Monthly meeting is tomorrow June 9 at 9am PT: https://calendar.google.com/event?action=TEMPLATE&tmeid=MDRubzk0cXAwZzA4bXRmY24yZjBkdTZzbDNfMjAyMTA2MDlUMTYwMDAwWiBqdWxpZW5AZGF0YWtpbi5jb20&tmsrc=julien%40datakin.com&scp=ALL|https://calendar.google.com/event?action=TEMPLATE&tmeid=MDRubzk0cXAwZzA4bXRmY24yZjBkdT[…]qdWxpZW5AZGF0YWtpbi5jb20&tmsrc=julien%40datakin.com&scp=ALL

*Thread Reply:* Hey @Julien Le Dem, I can’t add a link to my calendar… Can you send an invite?

*Thread Reply:* Will do. Also if you send your email in dm you can get added to the invite

*Thread Reply:* You can find the invitation on the tsc mailing list: https://lists.lfaidata.foundation/g/openlineage-tsc/topic/invitation_openlineage/83423919?p=,,,20,0,0,0::recentpostdate%2Fsticky,,,20,2,0,83423919

*Thread Reply:* @Julien Le Dem Can't access the calendar.

*Thread Reply:* Can you please share the meeting details

*Thread Reply:* The calendar invite says 9am PDT, not 10am. Which is right?

*Thread Reply:* I have posted the notes on the wiki (includes link to recording) https://wiki.lfaidata.foundation/display/OpenLineage/Monthly+meeting+archive

Hi! Are there some 'close-to-real' sample events available to build off and compare to? I'd like to make sure what I'm outputting makes sense but it's hard when only comparing to very synthetic data.

*Thread Reply:* We’ve recently worked on a getting started guide for OpenLineage that we’d like to publish on the OpenLineage website. That should help with making things a bit more clear on usage. @Ross Turk / @Julien Le Dem might know of when that might become available. Otherwise, happy to answer any immediate questions you might have about posting/collecting OpenLineage events

*Thread Reply:* Here's a sample of what I'm producing, would appreciate any feedback if it's on the right track. One of our challenges is that 'dataset' is a little loosely defined for us as outputs since we take data from a warehouse/database and output to things like Salesforce, Airtable, Hubspot and even Slack.

{

eventType: 'START',

eventTime: '2021-06-09T08:45:00.395+00:00',

run: { runId: '2821819' },

job: {

namespace: '<hightouch://my-workspace>',

name: '<hightouch://my-workspace/sync/123>'

},

inputs: [

{

namespace: '<snowflake://abc1234>',

name: '<snowflake://abc1234/my_source_table>'

}

],

outputs: [

{

namespace: '<salesforce://mysf_instance.salesforce.com>',

name: 'accounts'

}

],

producer: 'hightouch-event-producer-v.0.0.1'

}

{

eventType: 'COMPLETE',

eventTime: '2021-06-09T08:45:30.519+00:00',

run: { runId: '2821819' },

job: {

namespace: '<hightouch://my-workspace>',

name: '<hightouch://my-workspace/sync/123>'

},

inputs: [

{

namespace: '<snowflake://abc1234>',

name: '<snowflake://abc1234/my_source_table>'

}

],

outputs: [

{

namespace: '<salesforce://mysf_instance.salesforce.com>',

name: 'accounts'

}

],

producer: 'hightouch-event-producer-v.0.0.1'

}

*Thread Reply:* One other question I have is really around how customers might take the metadata we emit at Hightouch and integrate that with OpenLineage metadata emitted from other tools like dbt, Airflow, and other integrations to create a true lineage of their data.

For example, if the data goes from S3 -> Snowflake via Airflow and then from Snowflake -> Salesforce via Hightouch, this would mean both Airflow/Hightouch would need to define the Snowflake dataset in exactly the same way to get the benefits of lineage?

*Thread Reply:* Hey, @Dejan Peretin! Sorry for the late replay here! Your OL events look solid and only have a few of suggestions:

- I would use a valid UUID for the run ID as the spec will standardize on that type, see https://github.com/OpenLineage/OpenLineage/pull/65

- You don’t need to provide the input dataset again on the

COMPLETEevent as the input datasets have already been associated with the run ID - For the producer, I’d recommend using a link to the producer source code version to link the producer version with the OL event that was emitted.

*Thread Reply:* You can now reference our OL getting started guide for a close-to-real example 🙂 , see http://openlineage.io/getting-started

*Thread Reply:* > … this would mean both Airflow/Hightouch would need to define the Snowflake dataset in exactly the same way to get the benefits of lineage? Yes, the dataset and the namespace that it was registered under would have to be the same to properly build the lineage graph. We’re working on defining unique dataset names and have made some good progress in this area. I’d suggest reviewing the OL naming conventions if you haven’t already: https://github.com/OpenLineage/OpenLineage/blob/main/spec/Naming.md

*Thread Reply:* Thanks! I'm really excited to see what the future holds, I think there are so many great possibilities here. Will be keeping a watchful eye. 🙂

Hey everyone! I've been running into a minor OpenLineage issue and I was curious if anyone had any advice. So according to OpenLineage specs its suggested that for a dataset coming from S3 that its namespace be in the form of s3://<bucket>. We have implemented our code to do so and RunEvents are published without issue but when trying to retrieve the information of this RunEvent (like the job) I am unable to retrieve it based on namespace from both /api/v1/namespaces/s3%3A%2F%2F<bucket name> (encoding since : and / are special characters in URL) and the beta endpoint of /api/v1-beta/lineage?nodeId=<dataset>:<namespace>:<name> and instead get a 400 error with a "Ambiguous Segment in URI" message.

Any and all advice would be super helpful! Thank you so much!

*Thread Reply:* Sounds like problem is with Marquez - might be worth to open issue here: https://github.com/MarquezProject/marquez/issues

*Thread Reply:* Thank you! Will do.

I have opened a proposal for versioning and publishing the spec: https://github.com/OpenLineage/OpenLineage/issues/63

We have a nice OpenLineage website now. https://openlineage.io/ Thank you to contributors: @Ross Turk @Willy Lulciuc @Michael Collado!

Hi everyone! Im trying to run a spark job with openlineage and marquez...But Im getting some errors

*Thread Reply:* Here is the error...

21/06/20 11:02:56 WARN ArgumentParser: missing jobs in [, api, v1, namespaces, spark_integration] at 5

21/06/20 11:02:56 WARN ArgumentParser: missing runs in [, api, v1, namespaces, spark_integration] at 7

21/06/20 11:03:01 ERROR AsyncEventQueue: Listener SparkListener threw an exception

java.lang.NullPointerException

at marquez.spark.agent.SparkListener.onJobEnd(SparkListener.java:165)

at org.apache.spark.scheduler.SparkListenerBus$class.doPostEvent(SparkListenerBus.scala:39)

at org.apache.spark.scheduler.AsyncEventQueue.doPostEvent(AsyncEventQueue.scala:37)

at org.apache.spark.scheduler.AsyncEventQueue.doPostEvent(AsyncEventQueue.scala:37)

at org.apache.spark.util.ListenerBus$class.postToAll(ListenerBus.scala:91)

at <a href="http://org.apache.spark.scheduler.AsyncEventQueue.org">org.apache.spark.scheduler.AsyncEventQueue.org</a>$apache$spark$scheduler$AsyncEventQueue$$super$postToAll(AsyncEventQueue.scala:92)

at org.apache.spark.scheduler.AsyncEventQueue$$anonfun$org$apache$spark$scheduler$AsyncEventQueue$$dispatch$1.apply$mcJ$sp(AsyncEventQueue.scala:92)

at org.apache.spark.scheduler.AsyncEventQueue$$anonfun$org$apache$spark$scheduler$AsyncEventQueue$$dispatch$1.apply(AsyncEventQueue.scala:87)

at org.apache.spark.scheduler.AsyncEventQueue$$anonfun$org$apache$spark$scheduler$AsyncEventQueue$$dispatch$1.apply(AsyncEventQueue.scala:87)

at scala.util.DynamicVariable.withValue(DynamicVariable.scala:58)

at <a href="http://org.apache.spark.scheduler.AsyncEventQueue.org">org.apache.spark.scheduler.AsyncEventQueue.org</a>$apache$spark$scheduler$AsyncEventQueue$$dispatch(AsyncEventQueue.scala:87)

at org.apache.spark.scheduler.AsyncEventQueue$$anon$1$$anonfun$run$1.apply$mcV$sp(AsyncEventQueue.scala:83)

at org.apache.spark.util.Utils$.tryOrStopSparkContext(Utils.scala:1302)

at org.apache.spark.scheduler.AsyncEventQueue$$anon$1.run(AsyncEventQueue.scala:82)

*Thread Reply:* Here is my code ...

```from pyspark.sql import SparkSession from pyspark.sql.functions import lit

spark = SparkSession.builder \ .master('local[1]') \ .config('spark.jars.packages', 'io.github.marquezproject:marquezspark:0.15.2') \ .config('spark.extraListeners', 'marquez.spark.agent.SparkListener') \ .config('openlineage.url', 'http://localhost:5000/api/v1/namespaces/spark_integration/') \ .config('openlineage.namespace', 'sparkintegration') \ .getOrCreate()

Supress success

spark.sparkContext.jsc.hadoopConfiguration().set('mapreduce.fileoutputcommitter.marksuccessfuljobs', 'false') spark.sparkContext.jsc.hadoopConfiguration().set('parquet.summary.metadata.level', 'NONE')

dfsourcetrip = spark.read \ .option('inferSchema', True) \ .option('header', True) \ .option('delimiter', '|') \ .csv('/Users/bcanal/Workspace/poc-marquez/pocspark/resources/data/source/trip.csv') \ .createOrReplaceTempView('sourcetrip')

dfdrivers = spark.table('sourcetrip') \ .select('driver') \ .distinct() \ .withColumn('drivername', lit('Bruno')) \ .withColumnRenamed('driver', 'driverid') \ .createOrReplaceTempView('source_driver')

df = spark.sql( """ SELECT d., t. FROM sourcetrip t, sourcedriver d WHERE t.driver = d.driver_id """ )

df.coalesce(1) \ .drop('driverid') \ .write.mode('overwrite') \ .option('path', '/Users/bcanal/Workspace/poc-marquez/pocspark/resources/data/target') \ .saveAsTable('trip')```

*Thread Reply:* After this execution, I can see just the source from first dataframe called dfsourcetrip...

*Thread Reply:* I was expecting to see all source dataframes, target dataframes and the job

*Thread Reply:* I`m running spark local on my laptop and I followed marquez getting start to up it

*Thread Reply:* I think there's a race condition that causes the context to be missing when the job finishes too quickly. If I just add

spark.sparkContext.setLogLevel('info')

to the setup code, everything works reliably. Also works if you remove the master('local[1]') - at least when running in a notebook

i need to implement export functionality for my data lineage project.

as part of this i need to convert the information fetched from graph db (neo4j) to CSV format and send in response.

can someone please direct me to the CSV format of open lineage data

*Thread Reply:* Hey, @anup agrawal. This is a great question! The OpenLineage spec is defined using the Json Schema format, and it’s mainly for the transport layer of OL events. In terms of how OL events are eventually stored, that’s determined by the backend consumer of the events. For example, Marquez stores the raw event in a lineage_events table, but that’s mainly for convenience and replayability of events . As for importing / exporting OL events from storage, as long as you can translate the CSV to an OL event, then HTTP backends like Marquez that support OL can consume them

*Thread Reply:* > as part of this i need to convert the information fetched from graph db (neo4j) to CSV format and send in response. Depending on the exported CSV, I would translate the CSV to an OL event, see https://github.com/OpenLineage/OpenLineage/blob/main/spec/OpenLineage.json

*Thread Reply:* When you say “send in response”, who would be the consumer of the lineage metadata exported for the graph db?

*Thread Reply:* so far what i understood about my requirement is that. 1. my service will receive OL events

*Thread Reply:* 2. store it in graph db (neo4j)

*Thread Reply:* 3. this lineage information will be displayed on ui, based on the request.

- now my part in that is to implement an Export functionality, so that someone can download it from UI. in UI there will be option to download the report.

- so i need to fetch data from storage and convert it into CSV format, send to UI

- they can download the report from UI.

SO my question here is that i have never seen how that CSV report look like and how do i achieve that ? when i had asked my team how should CSV look like they directed me to your website.

*Thread Reply:* I see. @Julien Le Dem might have some thoughts on how an OL event would be represented in different formats like CSV (but, of course, there’s also avro, parquet, etc). The Json Schema is the recommended format for importing / exporting lineage metadata. And, for a file, each line would be an OL event. But, given that CSV is a requirement, I’m not sure how that would be structured. Or at least, it’s something we haven’t previously discussed

i am very new to this .. sorry for any silly questions

*Thread Reply:* There are no silly questions! 😉

Hello, I have read every topic and listened to 4 talks and the podcast episode about OpenLineage and Marquez due to my basic understanding for the data engineering field, I have a couple of questions which I did not understand: 1- What are events and facets and what are their purpose? 2- Can I implement the OpenLineage API to any software? or does the software needs to be integrated with the OpenLineage API? 3- Can I say that OpenLineage is about observability and Marquez is about collecting and storing the metadata? Thank you all for being cooperative.

*Thread Reply:* Welcome, @Abdulmalik AN 👋 Hopefully the talks / podcasts have been informative! And, sure, happy to clarify a few things:

> What are events and facets and what are their purpose? An OpenLineage event is used to capture the lineage metadata at a point in time for a given run in execution. That is, the runs state transition, the inputs and outputs consumed/produced and the job associated with the run are part of the event. The metadata defined in the event can then be consumed by an HTTP backend (as well as other transport layers). Marquez is an HTTP backend implementation that consumes OL events via a REST API call. The OL core model only defines the metadata that should be captured in the context of a run, while the processing of the event is up to the backend implementation consuming the event (think consumer / producer model here). For Marquez, the end-to-end lineage metadata is stored for pipelines (composed of multiple jobs) with built-in metadata versioning support. Now, for the second part of your question: the OL core model is highly extensible via facets. A facet is user-defined metadata and enables entity enrichment. I’d recommend checking out the getting started guide for OL 🙂

> Can I implement the OpenLineage API to any software? or does the software needs to be integrated with the OpenLineage API? Do you mean HTTP vs other protocols? Currently, OL defines an API spec for HTTP backends, that Marquez has adopted to ingest OL events. But there are also plans to support Kafka and many others.

> Can I say that OpenLineage is about observability and Marquez is about collecting and storing the metadata? > Thank you all for being cooperative. Yep! OL defines the metadata to collect for running jobs / pipelines that can later be used for root cause analysis / troubleshooting failing jobs, while Marquez is a metadata service that implements the OL standard to both consume and store lineage metadata while also exposing a REST API to query dataset, job and run metadata.

Hi OpenLineage team! Has anyone got this working on databricks yet? I’ve been working on this for a few days and can’t get it to register lineage. I’ve attached my notebook in this thread.

silly question - does the jar file need be on the cluster? Which versions of spark does OpenLineage support?

*Thread Reply:* I based my code on this previous post https://openlineage.slack.com/archives/C01CK9T7HKR/p1624198123045800

*Thread Reply:* In your first cell, you have

from pyspark.sql import SparkSession

from pyspark.sql.functions import lit

spark.sparkContext.setLogLevel('info')

unfortunately, the reference to sparkContext in the third line forces the initialization of the SparkContext so that in the next cell, your new configuration is ignored. In pyspark, you must initialize your SparkSession before any references to the SparkContext. It works if you remove the setLogInfo call from the first cell and make your 2nd cell

spark = SparkSession.builder \

.config('spark.jars.packages', 'io.github.marquezproject:marquez_spark:0.15.2') \

.config('spark.extraListeners', 'marquez.spark.agent.SparkListener') \

.config('openlineage.url', '<https://domain.com>') \

.config('openlineage.namespace', 'my-namespace') \

.getOrCreate()

spark.sparkContext.setLogLevel('info')

How would one capture lineage for job that's processing streaming data? Is that in scope for OpenLineage?

*Thread Reply:* It’s absolutely in scope! We’ve primarily focused on the batch use case (ETL jobs, etc), but the OpenLineage standard supports both batch and streaming jobs. You can check out our roadmap here, where you’ll find Flink and Beam on our list of future integrations.

*Thread Reply:* Is there a streaming framework you’d like to see added to our roadmap?

*Thread Reply:* Welcome, @mohamed chorfa 👋 . Let’s us know if you have any questions!

*Thread Reply:* Really looking follow the evolution of the specification from RawData to the ML-Model

Hello OpenLineage community, We have been working on fleshing out the OpenLineage roadmap. See on github on the currently prioritized effort: https://github.com/OpenLineage/OpenLineage/projects Please add your feedback to the roadmap by either commenting on the github issues or opening new issues.

In particular, I have opened an issue to finalize our mission statement: https://github.com/OpenLineage/OpenLineage/issues/84

*Thread Reply:* Based on community feedback, The new proposed mission statement: “to enable the industry at-large to collect real-time lineage metadata consistently across complex ecosystems, creating a deeper understanding of how data is produced and used”

I have updated the proposal for the spec versioning: https://github.com/OpenLineage/OpenLineage/issues/63

Hi all. I'm trying to get my bearings on openlineage. Love the concept. In our data transformation pipelines, output datasets are explicitly versioned (we have an incrementing snapshot id). Our storage layer (deltalake) allows us to also ingest 'older' versions of the same dataset, etc. If I understand it correctly I would have to add some inputFacets and outputFacets to run to store the actual version being referenced. Is that something that is currently available, or on the roadmap, or is it something I could extend myself?

*Thread Reply:* It is on the roadmap and there’s a ticket open but nobody is working on it at the moment. You are very welcome to contribute a spec and implementation

*Thread Reply:* Please comment here and feel free to make a proposal: https://github.com/OpenLineage/OpenLineage/issues/35

TL;DR: our database supports time-travel, and runs can be set up to use a specific point-in-time of an input. How do we make sure to keep that information within openlineage

Hi, on a subject of spark integrations - I know that there is spark-marquez but was curious did you also consider https://github.com/AbsaOSS/spline-spark-agent ? It seems like this and spark-marquez are doing similar thing and maybe it would make sense to add openlineage support to spline spark agent?

*Thread Reply:* cc @Julien Le Dem @Maciej Obuchowski

*Thread Reply:* @Michael Collado

The OpenLineage Technical Steering Committee meetings are Monthly on the Second Wednesday 9:00am to 10:00am US Pacific and the link to join the meeting is https://us02web.zoom.us/j/81831865546?pwd=RTladlNpc0FTTDlFcWRkM2JyazM4Zz09 The next meeting is this Wednesday All are welcome. • Agenda: ◦ Finalize the OpenLineage Mission Statement ◦ Review OpenLineage 0.1 scope ◦ Roadmap ◦ Open discussion ◦ Slides: https://docs.google.com/presentation/d/1fD_TBUykuAbOqm51Idn7GeGqDnuhSd7f/edit#slide=id.ge4b57c6942_0_46 notes are posted here: https://wiki.lfaidata.foundation/display/OpenLineage/Monthly+TSC+meeting.,.,_

*Thread Reply:* Feel free to share your email with me if you want to be added to the gcal invite

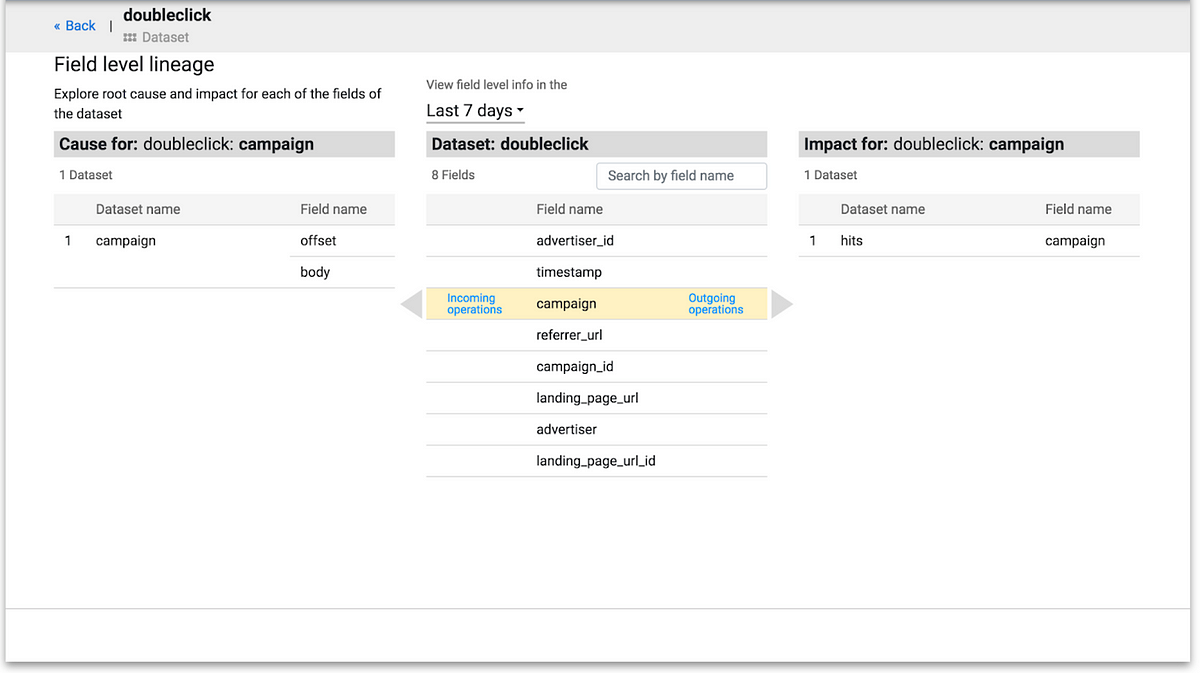

Hello, is it possible to track lineage on column level? For example for SQL like this:

CREATE TABLE T2 AS SELECT c1,c2 FROM T1;

I would like to record this lineage:

T1.C1 -- job1 --> T2.C1

T1.C2 -- job1 --> T2.C2

Would that be possible to record in OL format?

(the important thing for me is to be able to tell that T1.C1 has no effect on T2.C2)

I have updated the notes and added the link to the recording of the meeting this morning: https://wiki.lfaidata.foundation/display/OpenLineage/Monthly+TSC+meeting

*Thread Reply:* In particular, please review the versioning proposal: https://github.com/OpenLineage/OpenLineage/issues/63

*Thread Reply:* and the mission statement: https://github.com/OpenLineage/OpenLineage/issues/84

*Thread Reply:* for this one, please give explicit approval in the ticket

*Thread Reply:* @Zhamak Dehghani @Daniel Henneberger @Drew Banin @James Campbell @Ryan Blue @Maciej Obuchowski @Willy Lulciuc ^

*Thread Reply:* Per the votes in the github ticket, I have finalized the charter here: https://docs.google.com/document/d/11xo2cPtuYHmqRLnR-vt9ln4GToe0y60H/edit

Hi Everyone. I am PMC member and committer of Apache Airflow. Watched the talk at the summit https://airflowsummit.org/sessions/2021/data-lineage-with-apache-airflow-using-openlineage/ and thought I might help (after the Summit is gone 🙂 with making OpenLineage/Marquez more seemlesly integrated in Airflow

*Thread Reply:* The demo in this does not really use the openlineage spec does it?

Did I miss something - the API that was should for lineage was that of Marquez, how does Marquest use the open lineage spec?

*Thread Reply:* I have a question about the SQLJobFacet in the job schema - isn't it better to call it the TransformationJob Facet or the ProjecessJobFacet such that any logic in the appropriate language and be described? Am I misinterpreting the intention of SQLJobFacet is to capture the logic that runs for a job?

*Thread Reply:* > The demo in this does not really use the openlineage spec does it?

@Samia Rahman In our Airflow talk, the demo used the marquez-airflow lib that sends OpenLineage events to Marquez’s

*Thread Reply:* > Did I miss something - the API that was should for lineage was that of Marquez, how does Marquest use the open lineage spec?

Yes, Marquez ingests OpenLineage events that confirm to the spec via the

Hi all, does OpenLineage intend on creating lineage off of query logs?

From what I have read, there are a number of supported integrations but none that cater to regular SQL based ETL. Is this on the OpenLineage roadmap?

*Thread Reply:* I would say this is more of an ingestion pattern, then something the OpenLineage spec would support directly. Though I completely agree, query logs are a great source of lineage metadata with minimal effort. On our roadmap, we have Kafka as a supported backend which would enable streaming lineage metadata from query logs into a topic. That said, confluent has some great blog posts on Change Data Capture: • https://www.confluent.io/blog/no-more-silos-how-to-integrate-your-databases-with-apache-kafka-and-cdc/ • https://www.confluent.io/blog/simplest-useful-kafka-connect-data-pipeline-world-thereabouts-part-1/

*Thread Reply:* Q: @Kenton (swiple.io) Are you planning on using Kafka connect? If so, I see 2 reasonable options:

- Stream query logs to a topic using the JDBC source connector, then have a consumer read the query logs off the topic, parse the logs, then stream the result of the query parsing to another topic as an OpenLineage event

- Add direct support for OpenLineage to the JDBC connector or any other application you planned to use to read the query logs.

*Thread Reply:* Either way, I think this is a great question and a common ingestion pattern we should document or have best practices for. Also, more details on how you plan to ingestion the query logs would be help drive the discussion.

*Thread Reply:* Using something like sqlflow could be a good starting point? Demo https://sqlflow.gudusoft.com/?utm_source=gspsite&utm_medium=blog&utm_campaign=support_article#/

*Thread Reply:* @Kenton (swiple.io) I haven’t heard of sqlflow but it does look promising. It’s not on our current roadmap, but I think there is a need to have support for parsing query logs as OpenLineage events. Do you mind opening an issue and outlining you thoughts? It’d be great to start the discussion if you’d like to drive this feature and help prioritize this 💯

The openlineage implementation for airflow and spark code integration currently lives in Marquez repo, my understanding from the open lineage scope is that the the integration implementation is the scope of open lineage, are the spark code migrations going to be moved to open lineage?

@Samia Rahman Yes, that is the plan. For details you can see https://github.com/OpenLineage/OpenLineage/issues/73

I have a question about the SQLJobFacet in the job schema - isn't it better to call it the TransformationJob Facet or the ProjecessJobFacet such that any logic in the appropriate language and be described, can be scala or python code that runs in the job facet and processing streaming or batch data? Am I misinterpreting the intention of SQLJobFacet is to capture the logic that runs for a job?

*Thread Reply:* Hey, @Samia Rahman 👋. Yeah, great question! The SQLJobFacet is used only for SQL-based jobs. That is, it’s not intended to capture the code being executed, but rather the just the SQL if it’s present. The SQL fact can be used later for display purposes. For example, in Marquez, we use the SQLJobFacet to display the SQL executed by a given job to the user via the UI.

*Thread Reply:* To capture the logic of the job (meaning, the code being executed), the OpenLineage spec defines the SourceCodeLocationJobFacet that builds the link to source in version control

The process started a few months back when the LF AI & Data voted to accept OpenLineage as part of the foundation. It is now official, OpenLineage joined the LFAI & data Foundation. https://lfaidata.foundation/blog/2021/07/22/openlineage-joins-lf-ai-data-as-new-sandbox-project/

Hi, I am trying to create lineage between two datasets. Following the Spec, I can see the syntax for declaring the input and output datasets, and for all creating the associated Job (which I take to be the process in the middle joining the two datasets together). What I can't see is where in the specification to relate the job to the inputs and outputs. Do you have an example of this?

*Thread Reply:* The run event is always tied to exactly one job. It's up to the backend to store the relationship between the job and its inputs/outputs. E.g., in marquez, this is where we associate the input datasets with the job- https://github.com/MarquezProject/marquez/blob/main/api/src/main/java/marquez/db/OpenLineageDao.java#L132-L143

the OuputStatistics facet PR is updated based on your comments @Michael Collado https://github.com/OpenLineage/OpenLineage/pull/114

*Thread Reply:* /|~~~

///|

/////|

///////|

/////////|

\==========|===/

~~~~~~~~~~~~~~~~~~~~~

I have updated the DataQuality metrics proposal and the corresponding PR: https://github.com/OpenLineage/OpenLineage/issues/101 https://github.com/OpenLineage/OpenLineage/pull/115

Guys, I've merged circleCI publish snapshot PR

Snapshots can be found bellow: https://datakin.jfrog.io/artifactory/maven-public-libs-snapshot-local/io/openlineage/openlineage-java/0.0.1-SNAPSHOT/ openlineage-java-0.0.1-20210804.142910-6.jar https://datakin.jfrog.io/artifactory/maven-public-libs-snapshot-local/io/openlineage/openlineage-spark/0.1.0-SNAPSHOT/ openlineage-spark-0.1.0-20210804.143452-5.jar

Build on main passed (edited)

I added a mechanism to enforce spec versioning per: https://github.com/OpenLineage/OpenLineage/issues/63 https://github.com/OpenLineage/OpenLineage/pull/140

Hi all, at Booking.com we’re using Spline to extract granular lineage information from spark jobs to be able to trace lineage on column-level and the operations in between. We wrote a custom python parser to create graph-like structure that is sent into arangodb. But tbh, the process is far from stable and is not able to quickly answer questions like ‘which root input columns are used to construct column x’.

My impression with openlineage thus far is it’s focusing on less granular, table input-output information. Is anyone here trying to accomplish something similar on a column-level?

*Thread Reply:* Also interested in use case / implementation differences between Spline and OL. Watching this thread.

*Thread Reply:* It would be great to have the option to produce the spline lineage info as OpenLineage. To capture the column level lineage, you would want to add a ColumnLineage facet to the Output dataset facets. Which is something that is needed in the spec. Here is a proposal, please chime in: https://github.com/OpenLineage/OpenLineage/issues/148 Is this something you would be interested to do?

*Thread Reply:* regarding the difference of implementation, the OpenLineage spark integration focuses on extracting metadata and exposing it as a standard representation. (The OpenLineage LineageEvents described in the JSON-Schema spec). The goal is really to have a common language to express lineage and related metadata across everything. We’d be happy if Spline can produce or consume OpenLineage as well and be part of that ecosystem.

*Thread Reply:* Does anyone know if the Spline developers are in this slack group?

*Thread Reply:* @Luke Smith how have things progressed on your side the past year?

I have opened an issue to track the facet versioning discussion: https://github.com/OpenLineage/OpenLineage/issues/153

I have updated the agenda to the OpenLineage monthly TSC meeting: https://wiki.lfaidata.foundation/display/OpenLineage/Monthly+TSC+meeting (meeting information bellow for reference, you can also DM me your email to get added to a google calendar invite)

The OpenLineage Technical Steering Committee meetings are Monthly on the Second Wednesday 9:00am to 10:00am US Pacific and the link to join the meeting is https://us02web.zoom.us/j/81831865546?pwd=RTladlNpc0FTTDlFcWRkM2JyazM4Zz09 All are welcome.

Aug 11th 2021

• Agenda:

◦ Coming in OpenLineage 0.1

▪︎ OpenLineage spec versioning

▪︎ Clients

◦ Marquez integrations imported in OpenLineage

▪︎ Apache Airflow:

• BigQuery

• Postgres

• Snowflake

• Redshift

• Great Expectations

▪︎ Apache Spark

▪︎ dbt

◦ OpenLineage 0.2 scope discussion

▪︎ Facet versioning mechanism

▪︎ OpenLineage Proxy Backend (

*Thread Reply:* Just a reminder that this is in 2 hours

*Thread Reply:* I have added the notes to the meeting page: https://wiki.lfaidata.foundation/display/OpenLineage/Monthly+TSC+meeting

*Thread Reply:* The recording of the meeting is linked there: https://us02web.zoom.us/rec/share/2k4O-Rjmmd5TYXzT-pEQsbYXt6o4V6SnS6Vi7a27BPve9aoMmjm-bP8UzBBzsFzg.uY1je-PyT4qTgYLZ?startTime=1628697944000 • Passcode: =RBUj01C

Hi guys, great discussion today. Something we are particularly interested on is the integration with Airflow 2. I've been searching into Marquez and Openlineage repos and I couldn't find a clear answer on the status of that. I did some work locally to update the marquez-airflow package but I would like to know if someone else is working on this and maybe we could give it some help too.

*Thread Reply:* @Daniel Avancini I'm working on it. Some changes in airflow made current approach unfeasible, so slight change in a way how we capture events is needed. You can take a look at progress here: https://github.com/OpenLineage/OpenLineage/tree/airflow/2

*Thread Reply:* Thank you Maciej. I'll take a look

I have migrated the Marquez issues related to OpenLineage integrations to the OpenLineage repo

And OpenLineage 0.1.0 is out ! https://github.com/OpenLineage/OpenLineage/releases/tag/0.1.0

PR ready for review