Public Channels

- # boston-meetup

- # dagster-integration

- # data-council-meetup

- # dev-discuss

- # general

- # github-discussions

- # github-notifications

- # gx-integration

- # jobs

- # london-meetup

- # mark-grover

- # nyc-meetup

- # open-lineage-plus-bacalhau

- # providence-meetup

- # sf-meetup

- # spark-support-multiple-scala-versions

- # spec-compliance

- # toronto-meetup

- # user-generated-metadata

Private Channels

Direct Messages

Group Direct Messages

@Maciej Obuchowski has joined the channel

@Paweł Leszczyński has joined the channel

@Jakub Dardziński has joined the channel

@Michael Robinson has joined the channel

https://github.com/OpenLineage/OpenLineage/pull/2260 fun PR incoming

*Thread Reply:* hey look, more fun https://github.com/OpenLineage/OpenLineage/pull/2263

*Thread Reply:* nice to have fun with you Jakub

*Thread Reply:* Can't wait to see it on the 1st January.

*Thread Reply:* Ain’t no party like a dev ex improvement party

*Thread Reply:* Gentoo installation party is in similar category of fun

@Paweł Leszczyński approved PR #2661 with minor comments, I think the enum defined in the db layer is one comment we’ll need to address before merging; otherwise solid work dude 👌

_Minor_: We can consider defining a _run_state column and eventually dropping the event_type. That is, we can consider columns prefixed with _ to be "remappings" of OL properties to Marquez. -> didn't get this one. Is it for now or some future plans?

*Thread Reply:* I will then replace enum with string

also, what about this PR? https://github.com/MarquezProject/marquez/pull/2654

*Thread Reply:* this is the next to go

*Thread Reply:* and i consider it ready

*Thread Reply:* Then we have a draft one with streaming support https://github.com/MarquezProject/marquez/pull/2682/files -> which has an integration test of lineage endpoint working for streaming jobs

*Thread Reply:* I still need to work on #2682 but you can review #2654. once you get some sleep, of course 😉

Got the doc + poc for hook-level coverage: https://docs.google.com/document/d/1q0shiUxopASO8glgMqjDn89xigJnGrQuBMbcRdolUdk/edit?usp=sharing

*Thread Reply:* did you check if LineageCollector is instantiated once per process?

*Thread Reply:* Using it only via get_hook_lineage_collector

Anyone have thoughts about how to address the question about “pain points” here? https://openlineage.slack.com/archives/C01CK9T7HKR/p1700064564825909. (Listing pros is easy — it’s the cons we don’t have boilerplate for)

*Thread Reply:* Maybe something like “OL has many desirable integrations, including a best-in-class Spark integration, but it’s like any other open standard in that it requires contributions in order to approach total coverage. Thankfully, we have many active contributors, and integrations are being added or improved upon all the time.”

*Thread Reply:* Maybe rephrase pain points to "something we're not actively focusing on"

Apparently an admin can view a Slack archive at any time at this URL: https://openlineage.slack.com/services/export. Only public channels are available, though.

*Thread Reply:* you are now admin

have we discussed adding column level lineage support to Airflow? https://marquezproject.slack.com/archives/C01E8MQGJP7/p1700087438599279?thread_ts=1700084629.245949&cid=C01E8MQGJP7

*Thread Reply:* we have it in SQL operators

*Thread Reply:* OOh any docs / code? or if you’d like to respond in the MQZ slack 🙏

*Thread Reply:* I’ll reply there

Any opinions about a free task management alternative to the free version of Notion (10-person limit)? Looking at Trello for keeping track of talks.

*Thread Reply:* What about GitHub projects?

*Thread Reply:* Projects is the way to go, thanks

*Thread Reply:* Set up a Projects board. New projects are private by default. We could make it public. The one thing that’s missing that we could use is a built-in date field for alerting about upcoming deadlines…

worlds are colliding: 6point6 has been acquired by Accenture

*Thread Reply:* https://newsroom.accenture.com/news/2023/accenture-to-expand-government-transformation-capabilities-in-the-uk-with-acquisition-of-6point6

*Thread Reply:* We should sell OL to governments

*Thread Reply:* we may have to rebrand to ClosedLineage

*Thread Reply:* not in this way; just emit any event second time to secret NSA endpoint

*Thread Reply:* we would need to improve our stock photo game

CFP for Berlin Buzzwords went up: https://2024.berlinbuzzwords.de/call-for-papers/ Still over 3 months to submit 🙂

*Thread Reply:* thanks, updated the talks board

*Thread Reply:* https://github.com/orgs/OpenLineage/projects/4/views/1

*Thread Reply:* I'm in, will think what to talk about and appreciate any advice 🙂

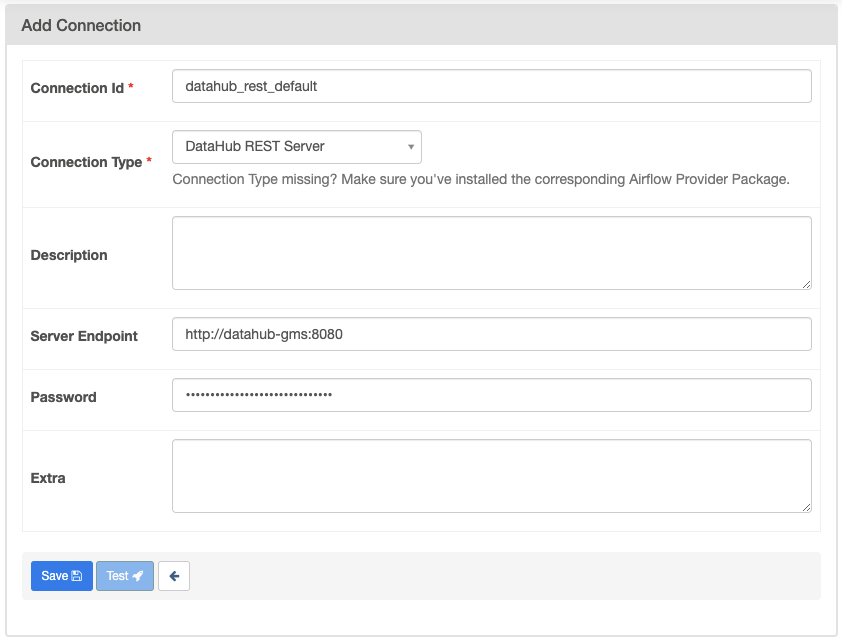

just searching for OpenLineage in the Datahub code base. They have an “interesting” approach? https://github.com/datahub-project/datahub/blob/2b0811b9875d7d7ea11fb01d0157a21fdd[…]odules/airflow-plugin/src/datahubairflowplugin/_extractors.py

*Thread Reply:* It looks like the datahub airflow plugin uses OL. but turns it off

https://github.com/datahub-project/datahub/blob/2b0811b9875d7d7ea11fb01d0157a21fdd67f020/docs/lineage/airflow.md

disable_openlineage_plugin true Disable the OpenLineage plugin to avoid duplicative processing.

They reuse the extractors but then “patch” the behavior.

*Thread Reply:* Of course this approach will need changing again with AF 2.7

*Thread Reply:* It looks like we can possibly learn from their approach in SQL parsing: https://datahubproject.io/docs/lineage/airflow/#automatic-lineage-extraction

*Thread Reply:* what's that approach? I only know they have been claiming best SQL parsing capabilities

*Thread Reply:* I haven’t looked in the details but I’m assuming it is in this repo. (my comment is entirely based on the claim here)

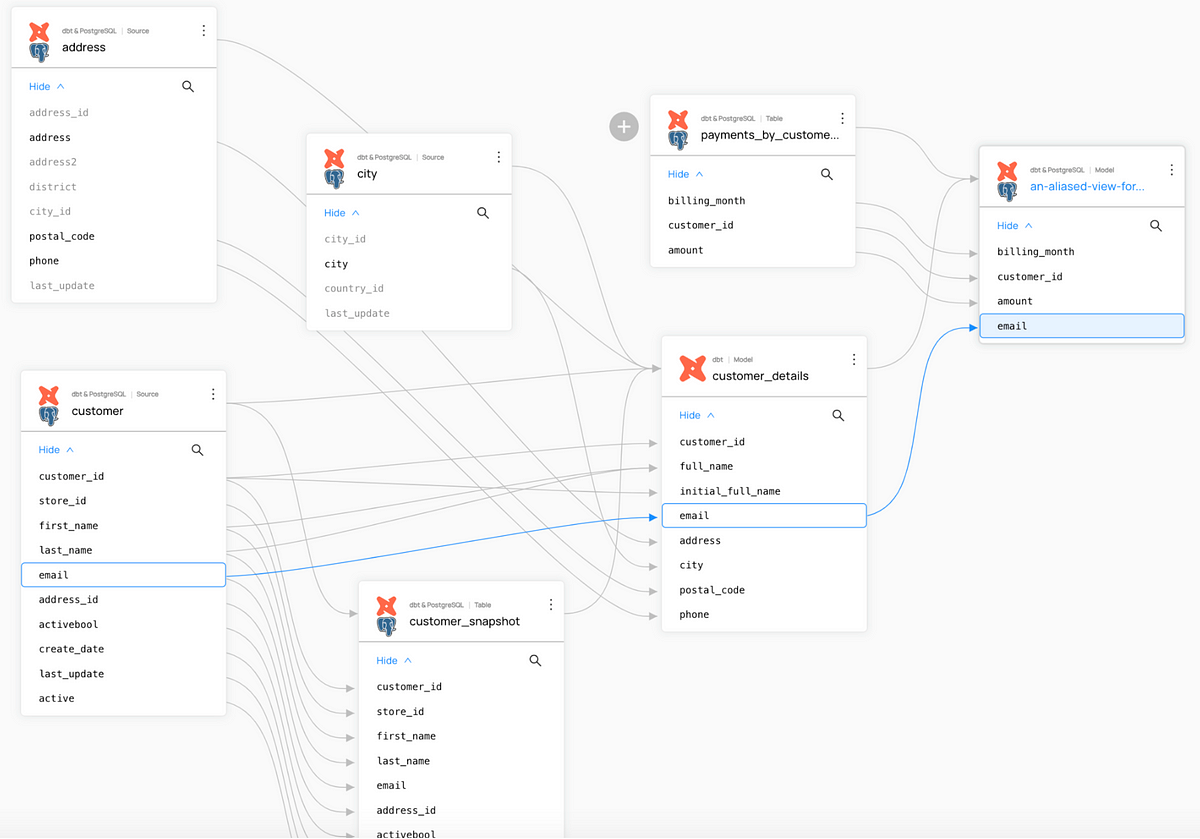

*Thread Reply:* <https://www.acryldata.io/blog/extracting-column-level-lineage-from-sql> -> The interesting difference is that in order to find table schemas, they use their data catalog to evaluate column-level lineage instead of doing this on the client side.

My understanding by example is: If you do

create table x as select ** from y

you need to resolve ** to know column level lineage. Our approach is to do that on the client side, probably with an extra call to database. Their approach is to do that based on the data catalog information.

I’m off on vacation. See you in a week

Maybe move today's meeting earlier, since no one from west coast is joining? @Harel Shein

*Thread Reply:* Ah! That would have been a good idea, but I can’t :(

*Thread Reply:* Do you prefer an earlier meeting tomorrow?

*Thread Reply:* maybe let's keep today's meeting then

The full project history is now available at https://openlineage.github.io/slack-archives/. Check it out!

*Thread Reply:* tfw you thought the scrollback was gone 😳

*Thread Reply:* slack has a good activation story, I wonder how much longer they can keep this up for

*Thread Reply:* always nice to be reminded that there are no actual incremental costs on their end

*Thread Reply:* I guess it’s the difference between storing your data in memory vs. on a glacier 🧊

*Thread Reply:* ah yes surely there is some tiering going on

*Thread Reply:* i might get to marquez slack/PRs today, but most likely tmr morning

*Thread Reply:* If you’re looking for priorities, it would be really great if you could give feedback on one of @Paweł Leszczyński streaming support PRs today

*Thread Reply:* ok, I’ll get to the streaming PR first

*Thread Reply:* FYI, the namespace filtering is a good idea, just needs some feedback on impl / naming

Jens would like to know if there’s anything we want included in the welcome portion of the slide deck. Suggestions? (Aside from the usual links)

@Paweł Leszczyński I reviewed your PR today (mainly the logic on versioning for streaming jobs); here is the main versioning limitations for jobs: a new JobVersion is created only when a job run completes or fails (or is in the done state); that is, we don’t know if we have received all the input/output datasets so we hold off on creating a new job version until we do.

For streaming, we’ll need to create a job version on start. Do we assume we have all input/output datasets associated with the streaming job? Does OpenLineage guarantee this to be the case for streaming jobs? Having versioning logic for batch vs streaming is a reasonable solution, just want to clarify

*Thread Reply:* yes, the logic adds distinction on how to create job version per processing type. For streaming, I think it makes more sense to create it at the beginning. Then, within other events of the same run, we need to check if the version has changed, and create new version in that case

*Thread Reply:* would we want to use the same versioning func Utils.newJobVersionFor() for streaming? That is, should we assume the input/output datasets contained within the OL event be the “current” set for the streaming job?

*Thread Reply:* that is,

2 input streams, 1 output stream (version 1)

then, 1 input streams, 2 output stream (version 2)

...

*Thread Reply:* but what about the case when the in/out streams are not present:

1 input streams, 2 output stream (version 2)

then, 1 input streams, 0 output stream (version 3)

...

*Thread Reply:* The meaning for the streaming events should be slightly different.

For batch, input and output datasets are cumulative from all the events. If we have an event with output datasets A + B, then another event with output datasets B + C, then we assume job has output datasets A + B + C.

For streaming, we may have a streaming job than for a week was reading data from topics A + B, and then in the next week it was reading from B + C. I think this should the mimicked in different job versions. Making it cumulative for jobs that run for several weeks does not make that much sense to me. The problem here is: what happens if a producer some extra events with no input/output datasets specified, like amount of bytes read? Shall we treat it as a new version? If not, why not?

This part is missing in PR and our Flink integration always sends all the input & datasets. I can add extra logic that will prevent creating new job version if event has no input nor output datasets. However, I can't see any clean and generic solution to this.

*Thread Reply:* > The problem here is: what happens if a producer some extra events with no input/output datasets specified, like amount of bytes read? Shall we treat it as a new version? If not, why not?

We can view the bytes read as additional metadata about the jobs inputs/outputs that wouldn’t trigger a new version (for the job or dataset). I would associate the bytes with the current dataset version and sum them up (I’ve read X bytes from dataset version D); you can also view tags in a similar way. In our current versioning logic for datasets, we create a new dataset version when a job completes, I think we’ll want to do something similar for streaming jobs; that is, when X bytes are written to a given dataset that would trigger a new version

*Thread Reply:* > I can add extra logic that will prevent creating new job version if event has no input nor output datasets

Yes, if in/out no datasets are present, then I wouldn’t create a new job version. @Julien Le Dem opened an issue a while back about this https://github.com/MarquezProject/marquez/issues/1513. that is, there’s a difference between an empty set [ ] and null

*Thread Reply:* > This part is missing in PR and our Flink integration always sends all the input & datasets This is very important to note in the code andor API docs

*Thread Reply:* Sure we should. Just wanted to make sure if this the way we want to go.

*Thread Reply:* @Willy Lulciuc did you had a chance to look at this as well https://github.com/MarquezProject/marquez/pull/2654 ? This should be merged before streaming support I believe.

*Thread Reply:* ahh sorry, I hadn’t realized they were related / dependent on one another. sure I’ll give the PR a pass

*Thread Reply:* I looked into your comments and found them, as always, really useful. I introduced changes based on most of them. Please take a look at my reponses within Job model class. I think there is one issue we still need to discuss.

What to do with existing type field? I would opt for deprecating it as within the introduced job facet, a notion of jobType stands for QUERY|COMMAND|DAG|TASK|JOB|MODEL , while processingType determines if a job is batch or streaming.

One solution I see is deprecating type and introducing JobLabels class as property within Job with fields like jobType, processingType , integration

Another would be to send processingType within existing type field. This would mimic existing API, but require further work. The disadvantage is that we still have mismatch between job type in marquez and openlineage spec.

I would opt for (2), but (1) works for me as well.

I’m working on a redesign of the Ecosystem page for a more modern, user-friendly layout. It’s a work in progress, and feedback is welcome: https://github.com/OpenLineage/docs/pull/258.

Can someone count the folks in the room please? Can’t see anyone other than the speaker

@Michael Robinson can you hear the questions?

*Thread Reply:* I could hear all but one of the questions after the first talk

*Thread Reply:* Oh then it's better than I thought

I just had a lovely conversation at reinvent with the CTO of dbt, Connor, and didn’t even know it was him until the end 🤯

Congrats on a great event!

*Thread Reply:* Yeah it was pretty nice 🙂 A lot of good discussions with Google people. Also Jarek Potiuk was there

*Thread Reply:* I think it won't be the last one Warsaw OpenLineage meetup

https://openlineage.slack.com/archives/C01CK9T7HKR/p1701288000527449 putting it here. I don’t feel like I’m the best person to answer but I feel like operational lineage which we’re trying to provide is the thing

created a project for Blog post ideas: https://github.com/orgs/OpenLineage/projects/5/views/1

Release update: we’re due for an OpenLineage release and overdue for a Marquez release. As tomorrow, the first, is a Friday, we should wait until Monday at the earliest. I’m planning to open a vote for an OL release then, but Marquez is red so I’m holding off on a Marquez release for the time being.

*Thread Reply:* I can address the red CI status, it’s bc we’re seeing issues publishing our snaphots

*Thread Reply:* I think we should release Marquez on Mon. as well

*Thread Reply:* I want to get this https://github.com/OpenLineage/OpenLineage/pull/2284 into OL release

would be interesting if we can use this comparison as a learning (improve docs, etc): https://blog.singleorigin.tech/race-to-the-finish-line-age/

or rather, use the format for comparing OL with other tools 😉

*Thread Reply:* It would be nice to have something like this (I would want it to be a little more even-handed, though). It will be interesting to see if they will ever update this now that there’s automated lineage from Airflow supported by OL

Review needed of the newsletter section on Airflow Provider progress @Jakub Dardziński @Maciej Obuchowski when you have a moment. It will ship by 5 PM ET today, fyi. Already shared it with you. Thanks!

*Thread Reply:* Thanks @Jakub Dardziński

They finally uploaded the OpenLineage Airflow Summit videos to the Airflow channel on YT: https://www.youtube.com/@ApacheAirflow/videos

On Monday I’m meeting with someone at Confluent about organizing a meetup in London in January. I’m thinking I’ll suggest Jan. 24 or 31 as mid-week days work better and folks need time to come back from the vacation. If you have thoughts on this, would you please let me know by 10:00 am ET on Monday? Also, standup will be happening before the meeting — perhaps we can discuss it then. @Harel Shein

*Thread Reply:* Confluent says January 31st will work for them for a London meetup, and they’ll be providing a speaker as well. Is it safe to firm this up with them?

*Thread Reply:* I'd say yes, eventually if Maciej doesn't get new passport till this time I can speak

*Thread Reply:* I already got the photos 😂

*Thread Reply:* you gotta share them

*Thread Reply:* Also apparently it's possible to get temporary passport at airport in 15 minutes

*Thread Reply:* How civilized...

*Thread Reply:* you can get it in the Warsaw airport just like last-minute passport, costs barely nothing (30 PLN which is ~7/8 USD)

*Thread Reply:* yeah, many people are surprised how developed our public service may be

*Thread Reply:* tbh it's always random, can be good can be shit 🙂

*Thread Reply:* lately it's definitely been better than 10 years ago tho

https://blog.datahubproject.io/extracting-column-level-lineage-from-sql-779b8ce17567 https://datastation.multiprocess.io/blog/2022-04-11-sql-parsers.html

*Thread Reply:* [6] Note that this isn’t a fully fair comparison, since the DataHub one had access to the underlying schemas whereas the other parsers don’t accept that information. 🙂

*Thread Reply:* I’m not sure about the methodology, but these numbers are pretty significant

*Thread Reply:* We tested on a corpus of ~7000 BigQuery SELECT statements and ~2000 CREATE TABLE ... AS SELECT (CTAS) statements.⁶

*Thread Reply:* More doctors smoke camels than any other cigarette 😉 If you test on BigQuery, you will not get comparable results for SnowFlake for example.

Wondering if we can do anything about this. We could write a blog post on lineage extraction from Snowflake SQL queries. This is something we spent time on and possibly we support dialect specific queries that others don't.

*Thread Reply:* it all comes to the question whether we should start publishing comparisons

*Thread Reply:* We can also accept schema information in our sql lineage parser. Actually, this would have been good idea I believe.

*Thread Reply:* for select ** use-case?

Release vote is here when you get a moment: https://openlineage.slack.com/archives/C01CK9T7HKR/p1701722066253149

Should we disable openlineage-airflow on Airflow 2.8 to force people to use provider?

*Thread Reply:* it sounds like maybe something about this should be included in the 2.8 docs. The dev rel team is talking about the marketing around 2.8 right now…

*Thread Reply:* also, the release will evidently be out next Thursday

*Thread Reply:* I mean, openlineage-airflow is not part of Airflow

*Thread Reply:* We'd have provider for 2.8

*Thread Reply:* so maybe the airflow newsletter would be better

*Thread Reply:* is there anything about the provider that should be in the 2.8 marketing?

*Thread Reply:* I don't think so

*Thread Reply:* Kenten wants to mention that it will be turned off in the 2.8 docs, so please lmk if anything about this changes

https://github.com/OpenLineage/docs/pull/263

Changelog PR for 1.6.0: https://github.com/OpenLineage/OpenLineage/pull/2298

*Thread Reply:* that’s weird ruff-lint found issues, especially when it has ruff version pinned

*Thread Reply:* CHANGELOG.md:10: acccording ==> according

this change is accurate though 🙂

*Thread Reply:* I tried to sneak in a fix in dev but the linter didn’t like it so I changed it back. All set now

*Thread Reply:* The release is in progress

*Thread Reply:* ah, gotcha

dev/get_changes.py:49:17: E722 Do not use bare `except`

dev/get_changes.py:49:17: S112 `try`-`except`-`continue` detected, consider logging the exception

for next time just add except Exception: instead of except: 🙂

*Thread Reply:* GTK, thank you

The release-integration-flink job failed with this error message:

Execution failed for task ':examples:stateful:compileJava'.

> Could not resolve all files for configuration ':examples:stateful:compileClasspath'.

> Could not find io.**********************:**********************_java:1.6.0-SNAPSHOT.

Required by:

project :examples:stateful

*Thread Reply:* No cache is found for key: v1-release-client-java--rOhZzScpK7x+jzwfqkQVwOVgqXO91M7VEEtzYHNvSmY=

Found a cache from build 155811 at v1-release-client-java-

is this standard behaviour?

*Thread Reply:* well, same happened for 1.5.0 and it worked

*Thread Reply:* we gotta wait for Maciej/Pawel :<

*Thread Reply:* Looks like Gradle version got bumped and gives some problems

*Thread Reply:* Think we can release by midday tomorrow?

*Thread Reply:* oh forgot about this totally

Feedback sought on a redesign of the ecosystem page that (hopefully) freshens and modernizes the page: https://github.com/OpenLineage/docs/pull/258

Changelog PR for 1.6.1: https://github.com/OpenLineage/OpenLineage/pull/2301

*Thread Reply:* @Maciej Obuchowski the flink job failed again

*Thread Reply:* well, at least it's a different error

*Thread Reply:* one more try? https://github.com/OpenLineage/OpenLineage/pull/2302 @Michael Robinson

*Thread Reply:* 1.6.2 changelog PR: https://github.com/OpenLineage/OpenLineage/pull/2304

*Thread Reply:* @Maciej Obuchowski 👆

*Thread Reply:* going out for a few hours, so next try would be tomorrow if it fails again...

*Thread Reply:* Thanks, Maciej. That worked, and 1.6.2 is out.

Starting a thread for collaboration on the community meeting next week

*Thread Reply:* Releases: 1.6.2

*Thread Reply:* 2023 recap/“best-of”?

*Thread Reply:* @Harel Shein any thoughts? Also, does anyone know if Julien will be back from vacation?

*Thread Reply:* We should probably try to something with Google proposal

*Thread Reply:* Not sure if it needs additional discussion, maybe just implementation?

*Thread Reply:* I can ask him, but it would probably be good if you could facilitate next week @Michael Robinson?

*Thread Reply:* I agree that we need to address those Google proposals, we should ask Jens if he’s up for presenting and discussing them first?

*Thread Reply:* maybe Pawel wants to present progress with https://github.com/OpenLineage/OpenLineage/issues/2162?

*Thread Reply:* Still waiting on a response from Jens

*Thread Reply:* I think Jens does not have a lot of time now

*Thread Reply:* Emailed him in case he didn’t see the message

*Thread Reply:* Jens confirmed

*Thread Reply:* He will have to join about 15 minutes late

*Thread Reply:* would love to come but I'm at friend's birthday at that time 😐

*Thread Reply:* I’d love to as well, but have diner plans 😕

*Thread Reply:* count me in if not too late

@Paweł Leszczyński mind giving this PR a quick look? https://github.com/MarquezProject/marquez/pull/2700 … it’s a dep on https://github.com/MarquezProject/marquez/pull/2698

*Thread Reply:* thanks @Paweł Leszczyński for the +1 ❤️

@Jakub Dardziński: In Marquez, metrics are exposed via the endpoint /metrics using prometheus (most of the custom metrics defined are here). Oddly enough, prometheus roadmap states that they have yet to adopt OpenMetrics! But, you can backfill the metrics into prometheus. So, knowing this, I would move to using metrics core by Dropwizard and us an exporter to export metrics to datadog using metrics-datadog. The one major benefit here is that we can define a framework around defining custom metrics internally within Marquez using core Dropwizard libraries, and then enable the reporter via configuration to emit metrics in marquez.yml : For example:

`metrics:

frequency: 1 minute # Default is 1 second.

reporters:

- type: datadog

.

.

*Thread Reply:* I tested this actually and it works the only thing is traces, I found it very poor to just have metrics around function name

*Thread Reply:* I totally agree, although I feel metrics and tracing are two separate things here

*Thread Reply:* I really appreciate your help and advice! 🙂

*Thread Reply:* Of course, happy to chime in here

*Thread Reply:* I’m just happy this is getting some much needed love 😄

*Thread Reply:* Also, it seems like datadog uses OpenTelemetry: > Datadog Distributed Tracing allows you easily ingest traces via the Datadog libraries and agent or via OpenTelemetry And looks like OpenTelemetry has support for Dropwizard

*Thread Reply:* yep, that's why I liked otel idea

*Thread Reply:* Also, here are the docs for DD + OpenTelemetry … so enabling OpenTelemetry in Marquez would be doable

*Thread Reply:* and we can make all of the configurable via marquez.yml

*Thread Reply:* hit me up with any questions! (just know, there will be a delay)

*Thread Reply:* > and we can make all of the configurable via marquez.yml

it ain’t that easy - we would need to build extended jar with OTEL agent which I think is way too much work compared to benefits. you can still configure via env vars or system properties

I’ve been looking into partitioning for psql, think there’s potential here for huge perf gains. Anyone have experience?

*Thread Reply:* partition ranges will give a boost by default

*Thread Reply:* Which tables do you want to partition? Event ones?

*Thread Reply:* • runs

• job_versions

• dataset_versions

• lineage_events

• and all the facets tables

@Paweł Leszczyński • PR 2682 approved with minor comments on stream versioning logic / suggestions ✅ • PR 2654 approved with minor comment (we’ll want to do a follow up analysis on the query perf improvements) ✅

*Thread Reply:* Thanks @Willy Lulciuc. I applied all the recent comments and merged 2654.

There is one discussion left in 2682, which I would like to resolve before merging. I added extra comment on the implemented approach and I am open to get to know if this is the approach we can go with.

@Julien Le Dem @Maciej Obuchowski discussion is about when to create a new job version for a streaming job. No deep dive in the code is required to take part in it. https://github.com/MarquezProject/marquez/pull/2682#discussion_r1425108745

*Thread Reply:* awesome, left my final thoughts 👍

Maybe we should clarify the documentation on adding custom facets at the integration level? Wdyt? https://openlineage.slack.com/archives/C01CK9T7HKR/p1702446541936589?threadts=1702033180.635339&channel=C01CK9T7HKR&messagets=1702446541.936589|https://openlineage.slack.com/archives/C01CK9T7HKR/p1702446541936589?threadts=1702033180.635339&channel=C01CK9T7HKR&messagets=1702446541.936589

Hey, i think it would help some people using Airflow integration (with Airflow 2.6) if we release a patch version of OL package with this PR included #2305. I am not sure what is the release cycle here, but maybe there is already an ETA on next patch release? If so, please let me know 🙂 Thanks !

*Thread Reply:* you gotta ask for the release in #general, 3 votes from committers approve immediate release 🙂

*Thread Reply:* @Michael Robinson 3 votes are in 🙂

*Thread Reply:* https://openlineage.slack.com/archives/C01CK9T7HKR/p1702474416084989

*Thread Reply:* Thanks for the ping. I replied in #general and will initiate the release as soon as possible.

seems that we don’t output the correct namespace as in the naming doc for Kafka. we output the kafka server/broker URL as namespace (in the Flink integration specifically) https://github.com/OpenLineage/OpenLineage/blob/main/spec/Naming.md#kafka

*Thread Reply:* @Paweł Leszczyński, would you be able to add the Kafka: prefix to the Kafka visitors in the flink integration tomorrow?

*Thread Reply:* I am happy to do this. Just to make, sure: docs is correct, flink implementation is missing kafka:// prefix, right?

*Thread Reply:* Thanks @Paweł Leszczyński. made a couple of suggestions, but we can def merge without

*Thread Reply:* would love to discuss this first. If a user stores an iceberg table in S3, then should it conform S3 naming or iceberg naming?

it's S3 location which defines a dataset. iceberg is a format for accessing data but not identifier as such.

*Thread Reply:* No rush, just something we noticed and that some people in the community are implementing their own patch for it.

my next year goal is to have programmatic way of using naming convention

*Thread Reply:* nope but would be worth reaching out to them to see how we could collaborate? they’re part of the LFAI (sandbox): https://github.com/bitol-io/open-data-contract-standard

*Thread Reply:* background https://medium.com/profitoptics/data-contract-101-568a9adbf9a9

*Thread Reply:* We should still have a conversation:)

If you want to join the conversation on Ray.io integration: https://join.slack.com/t/ray-distributed/shared_invite/zt-2635sz8uo-VW076XU6bKMEiFPCJWr65Q

*Thread Reply:* is there any specific channel/conversation?

*Thread Reply:* Yeah, but it’s private. Added you. For everyone else, Ping me on slack when you join and I’ll add you.

A vote to release Marquez 0.43.0 is open. We need one more: https://marquezproject.slack.com/archives/C01E8MQGJP7/p1702657403267769

*Thread Reply:* the changelog PR is RFR

AWS is making moves! https://github.com/aws-samples/aws-mwaa-openlineage

*Thread Reply:* the repo itself is pretty old, last updated 2mo ago and used OL package not provider (1.4.1)

*Thread Reply:* still it's nice they're doing this :)

*Thread Reply:* since they’re using MWAA they won’t be affected by turn-off with coming with Airflow 2.8 for a while. Otherwise that would be a good excuse to get in touch with them

*Thread Reply:* I think this repo was related to the blog which was authored a while back - https://aws.amazon.com/blogs/big-data/automate-data-lineage-on-amazon-mwaa-with-openlineage/ No other moves from our end so far, at least MWAA team : )

*Thread Reply:* Hi All, I am one of the owners of this repo and working to update this to work with MWAA 2.8.1, with apache-airflow-providers-openlineage==1.4.0. I am facing an issue with my set-up. I am using Redshift SQL as a sample use-case for this and getting an error relating to the Default Extractor. Haven't really looked at this at much detail yet but wondering if you have thoughts? I just updated the env variables to use: AIRFLOWOPENLINEAGETRANSPORT and AIRFLOWOPENLINEAGENAMESPACE and changed operator from PostgresOperator to SQLExecuteQueryOperator.

[2024-03-07 03:52:55,496] Failed to extract metadata using found extractor <airflow.providers.openlineage.extractors.base.DefaultExtractor object at 0x7fc4aa1e3950> - section/key [openlineage/disabled_for_operators] not found in config task_type=SQLExecuteQueryOperator airflow_dag_id=rs_source_to_staging task_id=task_insert_event_data airflow_run_id=manual__2024-03-07T03:52:11.634313+00:00

[2024-03-07 03:52:55,498] section/key [openlineage/config_path] not found in config

[2024-03-07 03:52:55,498] section/key [openlineage/config_path] not found in config

[2024-03-07 03:52:55,499] Executing:

insert into event

SELECT eventid, venueid, catid, dateid, eventname, starttime::TIMESTAMP

FROM s3_datalake.event;

*Thread Reply:* @Paul Wilson Villena It looks like a small mistake in the OL, that I'll fix in the next version - we missed adding a callback there, and getting the airflow configuration raises error when disabled_for_operators is not defined in the airflow.cfg file / the env variable. For now it should help to simply add the <a href="https://airflow.apache.org/docs/apache-airflow-providers-openlineage/1.4.0/configurations-ref.html#id1">[openlineage]</a> section to airflow.cfg, and set disabled_for_operators="" , or just export AIRFLOW__OPENLINEAGE__DISABLED_FOR_OPERATORS="" ,

*Thread Reply:* Will be released in the next provider version: https://github.com/apache/airflow/pull/37994

*Thread Reply:* Hi @Kacper Muda it seems I need to also set this: Otherwise this error persists:

section/key [openlineage/config_path] not found in config

os.environ["AIRFLOW__OPENLINEAGE__CONFIG_PATH"]=""

*Thread Reply:* Yes, sorry for missing that. I fixed in the code and forgot to mention it. If You were to not use AIRFLOW__OPENLINEAGE__TRANSPORT You'd have to set it to empty string as well, as it's missing the same fallback 🙂

*Thread Reply:* @Paul Wilson Villena FYI, apache-airflow-providers-openlineage==1.7.0 has just been released, containing the fix to that problem 🙂

The release is finished. Slack post, etc., coming soon

have we thought of making the SQL parser pluggable?

*Thread Reply:* what do you mean by that?

*Thread Reply:* (this is coming from apple) like what if a user wanted to provide their own parse for SQL in place of the one shipped with our integrations

*Thread Reply:* for example, if/when we integrate with DataHub, can they use their parse instead of one provided

*Thread Reply:* that would be difficult, we would need strong motivation for that 🫥

This question fits with what we said we would try to document more, can someone help them out with it this week? https://openlineage.slack.com/archives/C063PLL312R/p1702683569726449

Airflow 2.8 has been released. Are we still “turning off” the external Airflow integration with this one? What do Airflow users need to know to avoid unpleasant surprises? Kenten is open to including a note in the 2.8 blog post.

*Thread Reply:* As a newcomer here, I believe it would be wise to avoid supporting Airflow 2.8+ in the openlineage-airflow package. This approach would encourage users to transition to the provider package. It's important to clearly communicate that ongoing development and enhancements will be focused on the apache-airflow-providers-openlineage package, while the openlineage-airflow will primarily be updated for bug fixes. I'll look into whether this strategy is already noted in the documentation. If not, I will propose a documentation update.

*Thread Reply:* https://github.com/OpenLineage/OpenLineage/pull/2330 Please let me know if some changes are required, i was not sure how to properly implement it.

This looks cool, might be useful for us? https://github.com/aklivity/zilla

*Thread Reply:* the license is a bit weird, but should be ok for us. it’s apache, unless you directly compete with the company that built it.

*Thread Reply:* tbh not sure how

*Thread Reply:* I think we should be focused on 1) being compatible with most popular solutions (kafka...) 2) being easy to integrate with (custom transports)

rather than forcing our opinionated way on how OpenLineage events should flow in customer architecture

Apologies for having to miss today’s committer sync — I’ll be picking up my daughter from school

WDYT about starting to add integration specific channels and adding a little welcome bot for people when they join?

- spark-openlineage-dev

- spark-openlineage-users

- airflow-openlineage-dev

- airflow-openlineage-users

- spark-openlineage-dev

- flink-openlineage-users etc…

*Thread Reply:* the -dev and -users seems like overkill, but also understand that we may want to split user questions from development

*Thread Reply:* maybe just shorten to spark-integration , flink-integration , etc. Or integrations-spark etc

*Thread Reply:* we probably should consider a development and welcomechannel

*Thread Reply:* yeah.. makes sense to me. let’s leave this thread open for a few days so more people can chime in and then I’ll make a proposal based on that.

*Thread Reply:* makes sense to me

*Thread Reply:* I think there is not enough discussion for it to make sense

*Thread Reply:* and empty channels do not invite to discussion

*Thread Reply:* Maybe worth it for spark questions alone? And then for equal coverage we need the others. It’s getting easy to overlook questions in general due to the increased volume and long code snippets, IMO.

*Thread Reply:* yeah I think the volume is still quite low

*Thread Reply:* something like Airflow's #troubleshooting channel easily has order of magnitude more messages

*Thread Reply:* and even then, I'd split between something like #troubleshooting and #development rather than between integrations

*Thread Reply:* not only because it's too granular, but also there's a development that isn't strictly integration related or touches multiple ones

Link to the vote to release the hot fix in Marquez: https://marquezproject.slack.com/archives/C01E8MQGJP7/p1703101476368589

For the newsletter this time around, I’m thinking that a year-end review issue might be nice in mid-January when folks are back from vacation. And then a “double issue” at the end of January with the usual updates. We’ve still got a rather, um, “select” readership, so the stakes are low. If you have an opinion, please lmk.

*Thread Reply:* I’m for mid-January option

1.7.0 changelog PR needs a review: https://github.com/OpenLineage/OpenLineage/pull/2331

Notice for the release notes (WDYT?):

COMPATIBILITY NOTICE

Starting in 1.7.0, the Airflow integration will no longer support Airflow versions >=2.8.0.

Please use the OpenLineage Airflow Provider instead.

It includes a link to here: https://airflow.apache.org/docs/apache-airflow-providers-openlineage/stable/index.html

https://eu.communityovercode.org/ is that proper conference to talk about OL?

*Thread Reply:* Yes! At least to a more broader audience

Happy new year all!

Am I the only one that sees Free trial in progress here in OL slack?

*Thread Reply:* same for me, I think Slack initiated that

*Thread Reply:* we’re on the Slack Pro trial (we were on the free plan before)

*Thread Reply:* I think Slack initiated it

https://github.com/OpenLineage/OpenLineage/issues/2349 - this issue is really interesting. I am hoping to see follow-up from David.

So who wants to speak at our meetup with Confluent in London on Jan. 31st?

*Thread Reply:* do we have sponsorship to fly over?

*Thread Reply:* Not currently but I can look into it

*Thread Reply:* do we have other active community members based in the UK?

*Thread Reply:* I’ll ask around

*Thread Reply:* Abdallah Terrab at Decathlon has volunteered

*Thread Reply:* does it mean we're still looking for someone?

*Thread Reply:* I already told I'll go last month, but not sure if it's still needed

*Thread Reply:* and does Confluent have a talk there?

*Thread Reply:* Sorry about that, Maciej. I’ll ask Viraj if Astronomer would cover your ticket. There will be a Confluent speaker.

*Thread Reply:* if we need to choose between kafka summit and a meetup - I think we should go for kafka summit 🙂

*Thread Reply:* I think so too

*Thread Reply:* Viraj has requested approval for summit, and we can expect to hear from finance soon

*Thread Reply:* Also, question from him about the talk: what does “streaming” refer to in the title — Kafka only?

*Thread Reply:* Kafka, Spark, Flink

*Thread Reply:* If someone wants to do a joint talk let me know 😉

*Thread Reply:* @Willy Lulciuc will you be in UK then?

*Thread Reply:* I can be, if astronomer approves? but also realizing it’s Jan 31st, so a bit tight

*Thread Reply:* yeah, that sounds… unlikely 🙂

*Thread Reply:* also thinking about all the traveling ive been doing recently and things I need to work on. would be great to have some focus time

did anyone submit a talk to https://www.databricks.com/dataaisummit/call-for-presentations/?

*Thread Reply:* tagging @Julien Le Dem on this one. since the deadline is tomorrow.

*Thread Reply:* I don’t have my computer with me. ⛷️

*Thread Reply:* Does @Willy Lulciuc want to submit? Happy to be co-speaker (if you want. But not necessary)

*Thread Reply:* Willy is also on vacation, I’m happy to submit for the both of us. I’ll try to get something out today

*Thread Reply:* would be great to have someting on Databricks conference 🙂

*Thread Reply:* what I’m currently thinking. learnings from openlineage adoption in Airflow and Flink, and what can be learned / applied on Spark lineage.

This month’s TSC meeting is next Thursday. Anyone have any items to add to the agenda?

*Thread Reply:* @Kacper Muda would you want to talk about doc changes in Airflow provider maybe?

*Thread Reply:* no pressure if it's too late for you 🙂

*Thread Reply:* It's fine, I could probably mention something about it - the problem is that I have a standing commitment every Thursday from 5:30 to 7:30 PM (Polish time, GMT+1) which means I'm unable to participate. 😞

*Thread Reply:* @Kacper Muda would you be open to recording something? We could play it during the meeting. Something to consider if you’d like to participate but the time doesn’t work.

*Thread Reply:* Let me see how much time I'll have during the weekend and come back to You 🙂

*Thread Reply:* Sorry, I got sick and won't be able to do it. Maybe i'll try to make it personally to the next meeting, then the docs should already be released 🙂

*Thread Reply:* @Julien Le Dem are there updates on open proposals that you would like to cover?

*Thread Reply:* @Paweł Leszczyński as you authored about half of the changes in 1.7.0, would you be willing to talk about the Flink fixes? No slides necessary

*Thread Reply:* @Michael Robinson no updates from me

*Thread Reply:* sry @Michael Robinson, I won't be able to join this time. My changes in 1.7.0 are rather small fixes. Perhaps someone else can present them shortly.

I saw some weird behavior with openlineage-airflow where it will not respected the transport config for the client, even when setting the OPENLINEAGE_CONFIG to point to a config file.

the workaround is that if you set the OPENLINEAGE_URL env it will reach that and read the config.

this bug doesn’t seem to exist in the airflow provider since the loading method is completely different.

*Thread Reply:* would you mind creating an issue?

*Thread Reply:* will do. let me repro on the latest version of openlineage-airflow and see if I can repro on the provider

*Thread Reply:* config is definitely more complex than it needs to be...

*Thread Reply:* hmm.. so in 1.7.0 if you define OPENLINEAGE_URL then it completely ignores whatever is in OPENLINEAGE_CONFIG yaml

*Thread Reply:* if you don’t define OPENLINEAGE_URL, and you do define OPENLINEAGE_CONFIG: then openlineage is disabled 😂

*Thread Reply:* are you sure OPENLINEAGE_CONFIG points to something valid?

*Thread Reply:* ~look at the code flow for create:~ ~the default factory doesn’t supply config, so it tried to set http config from env vars. if that doesn’t work, it just returns the console transport~

*Thread Reply:* oh, no nvm. it’s a different flow.

*Thread Reply:* but yes, OPENLINEAGE_CONFIG works for sure

*Thread Reply:* on 0.21.1, it was working when the OPENLINEAGE_URL was supplied

*Thread Reply:* transport_config = None if "transport" not in self.config else self.config["transport"]

self.transport = factory.create(transport_config)

this self.config actually looks at

@property

def config(self) -> dict[str, Any]:

if self._config is None:

self._config = load_config()

return self._config

which then uses this:

```def loadconfig() -> dict[str, Any]:

file = _findyaml()

if file:

try:

with open(file) as f:

config: dict[str, Any] = yaml.safe_load(f)

return config

except Exception: # noqa: BLE001, S110

# Just move to read env vars

pass

return defaultdict(dict)

def findyaml() -> str | None: # Check OPENLINEAGECONFIG env variable path = os.getenv("OPENLINEAGECONFIG", None) try: if path and os.path.isfile(path) and os.access(path, os.R_OK): return path except Exception: # noqa: BLE001 if path: log.exception("Couldn't read file %s: ", path) else: pass # We can get different errors depending on system

# Check current working directory:

try:

cwd = os.getcwd()

if "openlineage.yml" in os.listdir(cwd):

return os.path.join(cwd, "openlineage.yml")

except Exception: # noqa: BLE001, S110

pass # We can get different errors depending on system

# Check $HOME/.openlineage dir

try:

path = os.path.expanduser("~/.openlineage")

if "openlineage.yml" in os.listdir(path):

return os.path.join(path, "openlineage.yml")

except Exception: # noqa: BLE001, S110

# We can get different errors depending on system

pass

return None```

*Thread Reply:* oh I think I see

*Thread Reply:* so this isn't passed if you have config but there is no transport field in this config

transport_config = None if "transport" not in self.config else self.config["transport"]

self.transport = factory.create(transport_config)

*Thread Reply:* here’s the config I’m using: ```transport: type: "kafka" config: bootstrap.servers: "kafka1,kafka2" security.protocol: "SSL"

# CA certificate file for verifying the broker's certificate.

ssl.ca.location=ca-cert

# Client's certificate

ssl.certificate.location=client_?????_client.pem

# Client's key

ssl.key.location=client_?????_client.key

# Key password, if any.

ssl.key.password=abcdefgh

topic: "SOF0002248-afaas-lineage-DEV-airflow-lineage" flush: True```

*Thread Reply:* it should load, but fail when actually trying to emit to kafka

*Thread Reply:* but it should still init the transport

*Thread Reply:* I’m testing on this image: ```FROM quay.io/astronomer/astro-runtime:6.4.0

COPY openlineage.yml /usr/local/airflow/

ENV OPENLINEAGE_CONFIG=/usr/local/airflow/openlineage.yml

ENV AIRFLOWCORELOGGING_LEVEL=DEBUG

ENV OPENLINEAGE_URL=http://foo.bar/```

*Thread Reply:* are you sure there are no permission errors?

*Thread Reply:* def _find_yaml() -> str | None:

# Check OPENLINEAGE_CONFIG env variable

path = os.getenv("OPENLINEAGE_CONFIG", None)

try:

if path and os.path.isfile(path) and os.access(path, os.R_OK):

return path

except Exception: # noqa: BLE001

if path:

log.exception("Couldn't read file %s: ", path)

else:

pass # We can get different errors depending on system

it checks stuff like

os.access(path, os.R_OK)

*Thread Reply:* I’m sure, because if I uncomment

ENV OPENLINEAGE_URL=<http://foo.bar/>

on 0.21.1, it works

*Thread Reply:* ah, I can add a permissive chmod to the dockerfile to see if it helps

*Thread Reply:* but I’m also not seeing any log/exception in the task logs

*Thread Reply:* will look at this later if you won't find solution 🙂

*Thread Reply:* one more thing, can you try with just

transport:

type: console

*Thread Reply:* but yeah, there’s something not great about separation of concerns between client config and adapter config

*Thread Reply:* adapter should not care, unless you're using MARQUEZ_URL... which is backwards compatibility for when it was still marquez airflow integration

I’m starting to put together the year-in-review issue of the newsletter and wonder if anyone has thoughts on the “big stories” of 2023 in OpenLineage. So far I’ve got: • Launched the Airflow Provider • Added static AKA design lineage • Welcomed new ecosystem partners (Google, Metaphor, Grai, Datahub) • Started meeting up and held events with Metaphor, Google, Collibra, etc. • Graduated from the LFAI What am I missing? Wondering in particular about features. Is iceberg support in Flink a “big” enough story? Custom transport types? SQL parser improvements?

Blog posts content about contributing to Openlineage-spark and code internals. The content comes from november meetup at google and I split it into two posts: https://docs.google.com/document/d/1Hu6clFckse1J_M1w2MMaTTJS0wUihtFsxbDQchtTVtA/edit?usp=sharing

Does anyone remember why execution_date was chosen as part of the runid for an Airflow task, instead of, for example, start_date?

Due to this decision, we can encounter duplicate runid if we delete the DagRun from the database, because the execution_date remains the same. If I run a backfill job for yesterday, then delete it and run it again, I get the same ids. I'm trying to understand the rationale behind this choice so we can determine whether it's a bug or a feature. 😉

*Thread Reply:* start_date is unreliable AFAIK, there can be no start date sometimes

*Thread Reply:* this might be true for only some version of airflow

*Thread Reply:* Also here where they define combination of some deterministic attributes, executiondate is used and not startdate, so there might be something to it. That still leaves us with the behaviour i described.

*Thread Reply:* > Due to this decision, we can encounter duplicate run_id if we delete the DagRun from the database, because the execution_date remains the same.

Hmm so given that OL runID uses the same params Airflow uses to generate the hash, this seems more like a limitation. Better question would be: if a user runs, deletes, then runs the same DAG again, is that an expected scenario we should handle? tl’dr yes, but Airflow hasn’t felt it important enough to address.

*Thread Reply:* not concurrent with Databricks conference this year? 😂

*Thread Reply:* nope, a week before so that everyone goes there and gets sick and can’t attend the databricks conf on the following week

*Thread Reply:* outstanding business move again

*Thread Reply:* @Willy Lulciuc wanna submit to this?

*Thread Reply:* yeah id love to: maybe something like “Detecting Snowflake table schema changes with OpenLineage events” + use cases + using lineage to detect impact?

*Thread Reply:* yeah! that sounds like a fun talk!

*Thread Reply:* idk if @Julien Le Dem will already be in 🇫🇷 that week? but I’d be happy to co-present if not.

*Thread Reply:* I don’t know when I’m flying out yet but it will be in the middle of that time frame.

*Thread Reply:* +1 on Harel co-presenting :)

*Thread Reply:* School last day is the 4th. I need to be in France (not jet lagged) before the 8th

*Thread Reply:* ok, @Harel Shein I’ll work on getting a rough draft ready before the deadline (added to my TODO of tasks)

hey, I'm not feeling well, will probably skip today meeting

Added comment to discussion about Spark parent job issue: https://github.com/OpenLineage/OpenLineage/issues/1672#issuecomment-1883524216 I think we have the consensus so I'll work on it.

*Thread Reply:* @Maciej Obuchowski should we give an update on that at the TSC meeting tomorrow?

Another issue: do you think we should somehow handle the partitioning in OpenLineage standard and integrations? I would think of a situation where we somehow know how dataset is partitioned - not think about how to automagically detect that. Some example situations:

- Job reads particular partitions of a dataset. Should we indicate this, possibly as InputFacet?

- Run writes a particular partition to a dataset. Would it be an useful information that some particular partition was written only by some run, while others were written by particular different runs, rather than treat output dataset as "global" modification of a dataset that possibly changes all the data?

*Thread Reply:* interesting. did you hear anyone with this usecase/requirement?

Hey, there’s a Windows user getting this error when trying to run Marquez: org-apache-tomcat-jdbc-pool-connectionpool-unable-to-create-initial-connection. Is it a driver issue? I’ll try to get more details and reproduce it, but if you know what this probably is related to, please lmk

*Thread Reply:* do we even support Windows? 😱

*Thread Reply:* Here’s more info about the use case:

Thanks Michael , this is really helpful , so I am working on prj where in I need to run marquez and open lineage on top of airflow dags which run dbt commands internally thru Bashoperator. I need to present to my org if we are going to be benefited by bringing in marquez matadata lineage

10:48

so was taking this approach of setting marquez first , then will explore how it integrates with airflow using bashoperator

*Thread Reply:* We don’t support this operator, right? What kind of graph can they expect?

*Thread Reply:* They can use dbt integration directly maybe?

*Thread Reply:* We now have a former astronomer as engineering director at DataHub

*Thread Reply:* https://www.linkedin.com/in/samantha-clark-engineer/

taking on letting users to run integration tests

*Thread Reply:* so there are two issues I think

*Thread Reply:* in Airflow workflows there are:

filters:

branches:

ignore: /pull\/[0-9]+/

which are set only for PRs with forked repos

*Thread Reply:* in Spark there are tests that strictly require env vars (that contain credentials to various external systems like databricks or bigquery). if there are no such env vars tests fail which is confusing new committers

*Thread Reply:* first behaviour is silent - which I think is bad because it’s easy to skip integration tests, build is green (but should it be? we don’t know, integration tests didn’t run and someone needs to know and remember that before approving and merging)

*Thread Reply:* second is misleading because it hints there’s something wrong in the code while it doesn’t neccessarily need to be. on the other hand you shouldn’t approve and merge failing build so you see there’s some action required

*Thread Reply:* reg. action required: for now we’ve been running some manual step (using https://github.com/jklukas/git-push-fork-to-upstream-branch) which is a workaround but it’s not straightforward and requires manual work. it also doesn’t solve two issues I mentioned above

*Thread Reply:* what I propose is to simply add approval step before integration tests: https://circleci.com/docs/workflows/#holding-a-workflow-for-a-manual-approval

it’s circleCI only thing so you need to login into circleCI, check if there’s any pending task to approve and then approve or not

*Thread Reply:* it doesn’t allow for much of configuration but I think it would work. you can’t also integrate it in any way with github UI (e.g. there’s no option to click something in PR’s UI to approve)

*Thread Reply:* but that would let project maintainers to manage when the code is safe to run and it’s still visible and (I think) readable for everyone

*Thread Reply:* the only thing I’m not sure about is who can approve

Anyone who has push access to the repository can click the **Approval** button to continue the workflow

but I’m not sure to which repo. if someone runs on fork and he/she has push access for fork - can he/she approve? it wouldn’t make sense..

*Thread Reply:* https://circleci.com/blog/access-control-cicd/

that’s best I could find from circleCI on this subject

*Thread Reply:* so I think the best solution would be to:

- add approval steps only before integration tests

- enable

Pass secrets to builds from forked pull requests(requires careful review of the CI process) - make sure release and integration tests contexts are set properly for tasks

*Thread Reply:* contexts give possibility to let users run e.g. unit tests in CI without exposing credentials

*Thread Reply:* this approach makes sense to me, assuming the permission model is how you outlined it

*Thread Reply:* one thing to add and test: approval steps could have condition to always run if it’s not from fork. not sure if that’s possible

*Thread Reply:* that sounds like it should be doable within what available in circle. GHA can definitely do that

*Thread Reply:* GHA can do things that circleCI can’t 😂

*Thread Reply:* > approval steps could have condition to always run if it’s not from fork. not sure if that’s possible ffs it’s not that easy to set up

*Thread Reply:* whenever I touch circleCI base changes I feel like magician. yet, here goes the PR with the magic (just look at the side effect, it bothered me for so long 🪄 ) 🚨 🚨 🚨 https://github.com/OpenLineage/OpenLineage/pull/2374

*Thread Reply:* I'm assuming the reason for the speedup in determine_changed_modules is that we don't go install yq every time this runs?

What are your nominees/candidates for the most important releases of 2023? I’ll start (feel free to disagree with my choices, btw): • 1.0.0 • 1.7.0 (disabled the external Airflow integration for 2.8+) • 0.26.0 (Fluentd) • 0.19.2 (column lineage in SQL parser) • …

*Thread Reply:* • 1.7.0 (disabled the external Airflow integration for 2.8+) that doesn’t sound like one of the most important

*Thread Reply:* 1.0.0 had this: https://openlineage.io/docs/releases/1_0_0 which actually fixed spec to match JSON schema spec

@Gowthaman Chinnathambi has joined the channel

1.8 changelog PR: https://github.com/OpenLineage/OpenLineage/pull/2380

FYI: I'm trying to set up our meetings with the new LFAI tooling, you may get some emails. you can ignore for now.

@Krishnaraj Raol has joined the channel

@Harel Shein @Julien Le Dem @tati Python client releases on both Marquez and OpenLineage are failing because PyPI no long supports password authentication. We need to configure the projects for Trusted Publishers or use an API token. I’ve looked and can’t find OpenLineage credentials for PyPI, but if I had them we’d still need to set up 2FA in order to make the change. How should we proceed here? Should we sync to sort this out? Thanks (btw I reached out to Willy separately when this came up during a Marquez release attempt last week)

*Thread Reply:* ah! I can look into it now.

*Thread Reply:* I'm failing to find the credentials for PyPI anywhere.

*Thread Reply:* @Maciej Obuchowski any ideas? (git blame shows you wrote that part, and @Michael Collado did some circleCI setup at some point)

*Thread Reply:* I just sent a reset password email to whoever registered for the openlineage user

*Thread Reply:* Thanks @Harel Shein. Can confirm I didn’t get it

*Thread Reply:* The password should be in the CircleCI context right?

*Thread Reply:* yes, it's there. I was trying to avoid writing a job that prints it out

*Thread Reply:* that will be the fallback if no one responds 🙂

*Thread Reply:* alright, I've setup 2FA and added a few more emails to the PyPI account as fallback.

*Thread Reply:* unfortunately, there's only one Trusted Publisher for PyPI, which is GH Actions. so we'll have to use the API token route. PR incoming soon

*Thread Reply:* didn't need to make any changes. I updated the circle context and re-ran the PyPI release - we're back to :largegreencircle:

*Thread Reply:* ^ @Michael Robinson FYI

*Thread Reply:* thank you @Harel Shein. Releasing the jars now. Everything looks good

It looks like my laptop bit the dust today so might miss the sync

Do I have to create account to join the meeting?

*Thread Reply:* turns out you can just pass your mail

*Thread Reply:* same on my side

*Thread Reply:* i don't remember if i have one

I did something wrong, meeting link should we good now: https://zoom-lfx.platform.linuxfoundation.org/meeting/91671382513?password=424b74a1-43fa-4d0e-885f-c9b5417cf57b

*Thread Reply:* use same e-mail you got invited to

there's a data warehouse (https://www.firebolt.io/) and a streaming platform (https://memphis.dev/) written in Go. so I guess it's not futile to write a Go client? 🙂

Any potential issues with scheduling a meetup on Tuesday, March 19th in Boston that you know of? The Astronomer all-hands is the preceding week

PR to add 1.8 release notes to the docs needs a review: https://github.com/OpenLineage/docs/pull/274

*Thread Reply:* thanks @Jakub Dardziński

Feedback requested on this draft of the year-in-review issue of the newsletter: https://docs.google.com/document/d/1MJB9ughykq9O8roe2dlav6d8QbHZBV2A0bTkF4w0-jo/edit?usp=sharing. Did you give a talk that isn't in the talks section? Is there an important release that should be in the releases section but isn't? Other feedback? Please share.

Feedback requested on a new page for displaying the ecosystem survey results: https://github.com/OpenLineage/docs/pull/275. The image was created for us by Amp. @Julien Le Dem @Harel Shein @tati

*Thread Reply:* Looks great!

*Thread Reply:* Very cool indeed! I wonder if we should share the raw data as well?

*Thread Reply:* Maybe if you could share it here first @Michael Robinson ?

*Thread Reply:* Yes, planning to include a link to the raw data as well and will share here first

*Thread Reply:* @Harel Shein thanks for the suggestion. Lmk if there's a better way to do this, but here's a link to Google's visualizations: https://docs.google.com/forms/d/1j1SyJH0LoRNwNS1oJy0qfnDn_NPOrQw_fMb7qwouVfU/viewanalytics. And a .csv is attached. Would you use this link on the page or link to a spreadsheet instead?

*Thread Reply:* Going with linking to Google's charts for the raw data for now. LMK if you'd prefer another format, e.g. Google sheet

*Thread Reply:* was just looking at it, looks great @Michael Robinson!

*Thread Reply:* Excellent work, @Michael Robinson! 👏 👏 👏

This looks nice!

*Thread Reply:* @Jakub Dardziński @Maciej Obuchowski is the Airflow Provider stuff still current?

*Thread Reply:* yeah, looks current

@Laurent Paris has joined the channel

Hey, created issue around using Spark metrics to increase operational ease of using Spark integration, feel free to comment: https://github.com/OpenLineage/OpenLineage/issues/2402

@Lohit VijayaRenu has joined the channel

Flyte is offering a 20-25-minute speaking slot at their community meeting on March 6th at 9 am PT. They'd like it to be a general introduction to OpenLineage

*Thread Reply:* I can take it if no one else is interested. I’ll be doing a lot of intro to OL presentations in the next few weeks, so I’ll be very practiced by then :)

@Emili Parreno has joined the channel

@Paweł Leszczyński / @tati I'm expecting at least 5 pictures from the meetup today! 😄

*Thread Reply:* could we also get a signup sheet and headcount please? 😬

Hi, is there any reason not to perform a release today as scheduled? I know we released 1.8 only one week ago, but it's the first of the month and @Damien Hawes's PR #2390 to add support for Scala 2.12 and 2.13 in Spark, along with fixes in the Spark and Flink integrations, are unreleased. Would it make more sense to wait for Damien's PR #2395?

*Thread Reply:* This isn't ready.

*Thread Reply:* I'm working on enabling integration tests for the Scala 2.13 variants.

*Thread Reply:* It will take some time, probably Monday / Tuesday next week is my ETA.

*Thread Reply:* Thanks @Damien Hawes, no pressure. But if early next week is your estimate I think it makes sense to wait. So this is GTK

*Thread Reply:* marquez in the wild! 💯💯🚀. thanks for sharing!

*Thread Reply:* they're doing anomaly detection successfully

*Thread Reply:* eg, deprecated tables still being used or tables written in multiple locations

*Thread Reply:* this needs to become a blog post!

*Thread Reply:* Would love to get the slides if they are willing to share!

*Thread Reply:* They've said yes to a blog post. This presentation gets us closer to starting in earnest. I've asked for the slides. Too bad the Confluent organizer wasn't supportive of recording. Maybe next time

Congratulations to our new committer on the team @Damien Hawes!!

*Thread Reply:* hey @Damien Hawes, now you can push your branches into origin and the integration tests are automatically approved 🙂

*Thread Reply:* Thank you for the congratulations @Harel Shein. It is humbling to be nominated and accepted.

@Maciej Obuchowski - haha, thanks!

*Thread Reply:* Congratulations @Damien Hawes! Thank you for all your contributions

https://opensource.googleblog.com/2024/02/announcing-google-season-of-docs-2024.html maybe we should improve our docs? 🙂

Agenda items or discussion topics for Thursday's TSC? @Julien Le Dem @Harel Shein

*Thread Reply:* I can take 5 minutes explaining Spark job hierarchy

*Thread Reply:* Nothing specific on my end

*Thread Reply:* more updates on the Spark side of things? @Paweł Leszczyński / @Damien Hawes may want to talk about the recent additions? we could also discuss the proposals for circuit breakers / metrics?

Anyone have an opinion about creating an OpenLineage "company" rather than a group on LinkedIn? You can get metrics from LinkedIn's API if you have a company rather than a group.

*Thread Reply:* Airflow does it: https://www.linkedin.com/company/apache-airflow/

*Thread Reply:* Spark too https://www.linkedin.com/company/apachespark/

*Thread Reply:* I think that's a good idea

We have agreement from Astronomer to move ahead with the Orbit changes discussed today in the committers sync. So I'll start on the exports asap.

Please follow our new OpenLineage company page on LinkedIn. Evidently, the only way to join the company is to add it to your experience history.

FYI: deadline Feb 25th https://2024.berlinbuzzwords.de/call-for-papers/

*Thread Reply:* @Peter Hicks want to submit a column-level lineage talk?

*Thread Reply:* we'll probably submit something about OpenLineage/Streaming with @Paweł Leszczyński

*Thread Reply:* @Willy Lulciuc want to come to Berlin? 😄

*Thread Reply:* we were thinking with Maciej about some kind of Flink & Streaming around OpenLineage talk, as this can be interesting to the community. I'll prepare abstract next wek

*Thread Reply:* updated the talks project on github

*Thread Reply:* @Maciej Obuchowski i wish, i’d need a sponsor 😅

This open-source community management tool looks interesting as a supplement to Orbit: https://ossinsight.io/analyze/OpenLineage/OpenLineage#overview

*Thread Reply:* you can compare projects side-by-side, which is something Orbit doesn't offer

Metaplane added an airflow provider to send data lineage data to. It's basically a new connection that extends BaseHook, and users need to proactively send callbacks, not sure why the took that approach. https://www.metaplane.dev/blog/airflow-integration

*Thread Reply:* If you're doing all that work, you could actually just dag_policy to add it to all the dags automatically

*Thread Reply:* https://docs.metaplane.dev/docs/airflow#dag-and-task-lineage I think that's fairly... unsophisticated approach?

*Thread Reply:* However, if I was redoing Airflow integration from scratch, I'd seriously rethink using connections instead of OPENLINEAGE_URL or configuring it the way we did

*Thread Reply:* The plugin could load up custom transport types and generate connection types based out of it

*Thread Reply:* Just curious why they did not use listeners... it's not like it's new feature now, it has been there for like 5 minor releases

*Thread Reply:* we still could add support from Airflow connections with some sort of deprecation of OPENLINEAGE_URL and current way

Hey, i was working on PR updating the docs for python, java and airflow (probably spark is next), and it hit me that we still have those in two places: README.md inside the package and openlineage.io site. Both contain quite the same information, sometimes the site has more (f.e. airflow). Do You think it would be good idea to just put a redirect to the site in README.md files for the packages? Maybe add some brief description at the top and then redirect user to the site? In long term, maintaining both and keeping them in sync is not an optimal solution imho.

*Thread Reply:* I agree -- in addition to the maintenance burden there's the risk posed by out-of-date/conflicting info for users

*Thread Reply:* I've wanted to remove README.md files as user-facing docs for some time.

However, there might be worth keeping those (maybe under different name) for purely internal development docs - not related to external API's, but like - use this incantation to compile the integration.

*Thread Reply:* I added the redirect here https://github.com/OpenLineage/OpenLineage/pull/2448

There was not much information about the compilation and other internal stuff, so i think first they need to be created and then we can keep them inside the package files under some different name, as Maciej mentioned.

2023 OpenLineage Survey Analysis/takeaways What surprised you or struck you as notable in the 2023 survey data? What would you like to see added, changed or removed in the 2024 version? I need your help to ensure we get the most useful and actionable insights we can from this exercise. I created a doc for sharing opinions/comments, but I'd be happy to discuss it in any forum. Here's the doc, including some initial takeaways, as a starting point: https://docs.google.com/document/d/1aiKtKjcFU0AjS46cow6cbx8EvzV0P2KnGQ4rFD4qKLM/edit?usp=sharing.

@Damien Hawes can we share Gradle plugins that you've implemented in Spark buildSrc with Flink integration too? It would cut down on boilerplate, but not sure how can we do this (without copying code) 🙂

*Thread Reply:* You have to publish the plugin to the Gradle Plugin repositoriy

*Thread Reply:* Seems like we could publish the plugin to the local dir first: https://docs.gradle.org/current/userguide/plugins.html#sec:custom_plugin_repositories

@Maciej Obuchowski in our OL spec, we require _prodcuer and _schemaURL , but our airflow provider, we only send the _producer. Was this an intentional omission of _schemaURL?

ahh i think I was confused given it’s marked as _base_skip_redact here, but looks like _schemaURL is being added… need to verify

*Thread Reply:* schemaURL is always send, the thing is it’s incorrect in many cases AFAIR

*Thread Reply:* yeah… we’re validating events and most (if not all) don’t have that field populated. does the airflow provider not set it?

*Thread Reply:* airlfow provider uses facets from openlineage-python package

*Thread Reply:* > thing is it’s incorrect in many cases AFAIR more curious about this comment. why would this be the case?

*Thread Reply:* tbh I’m not sure, URL for the base schema is correct only for https://raw.githubusercontent.com/OpenLineage/OpenLineage/main/spec/OpenLineage.json and facets in it (which is not too many). and for others it was not just done or mistakenly repeated with the same pattern?

*Thread Reply:* dunno, looks more like historical approach that wasn’t adjusted at some point

*Thread Reply:* and.. schemaURL is apparently irrelevant since noone validated it and reported issues

*Thread Reply:* is there an open issue for this? I feel we should have some guidance here or not require it, but we should decide what we do here

*Thread Reply:* elephant in the room

*Thread Reply:* no, I don’t think there is one

*Thread Reply:* ok, I’ll open one. we’re ingestion events into kafka and was incorrectly applying validation to events based on the spec

*Thread Reply:* @Julien Le Dem ping to address the elephant above 😉 we’re talking about in this thread

*Thread Reply:* I see. btw generating python facets from json schema would be best solution, it was too complex so far ;_;

*Thread Reply:* ok, I’ll summarize our discussion in the issue

*Thread Reply:* thanks Willy!

*Thread Reply:* @Willy Lulciuc double-checking - given the example of SQLJobFacet should the schema URL be set to: https://raw.githubusercontent.com/OpenLineage/OpenLineage/main/spec/facets/SQLJobFacet.json or https://raw.githubusercontent.com/OpenLineage/OpenLineage/main/spec/facets/SQLJobFacet.json#/$defs/SQLJobFacet or https://openlineage.io/spec/facets/1-0-0/SQLJobFacet.json#/$defs/SQLJobFacet?

or in other words - should it be the same as it is in Java client? 🙂 which is the last one

Abdallah (Decathlon) made a release request today. https://openlineage.slack.com/archives/C01CK9T7HKR/p1708514231690979

*Thread Reply:* I would ask that if we make a release today, we include the Scala 2.13 support for Spark (and merge the PR for the docs)

*Thread Reply:* I guess we have to decide splitting Iceberg off from the main code a reason to hold back the release?

*Thread Reply:* Thoughts @Maciej Obuchowski?

*Thread Reply:* I think we can do a release today/tomorrow, but having functionality removed makes this much harder choice

*Thread Reply:* What functionality would be removed?

*Thread Reply:* Iceberg support? Or do you propose something else?

*Thread Reply:* Oh, I was under the impression that Iceberg support wasn't going to be removed. Instead the direct dependencies on Iceberg in the core code were being removed, and bundled into their own module, but at the end of the day, the project would still contain classes capable of dealing with Iceberg.

*Thread Reply:* I understood that without https://github.com/OpenLineage/OpenLineage/pull/2437/files there will be no support for Iceberg for 2.13

*Thread Reply:* so I guess we can go and then follow up with next release soon?

*Thread Reply:* There should still be Iceberg support for 2.13

*Thread Reply:* If there wasn't, that would hurt us, and by us, I mean the team I belong to @ Booking and my partner teams.

*Thread Reply:* Basically, if I understand Mattia's direction is, we want to say:

OK, OpenLineage has been tested against these versions of Iceberg and found to be working.

*Thread Reply:* Just to confirm, are we waiting for this one https://github.com/OpenLineage/OpenLineage/pull/2446?

*Thread Reply:* We're waiting for comments on this: https://openlineage.slack.com/archives/C01CK9T7HKR/p1708349868363669

*Thread Reply:* No-one has left comments

*Thread Reply:* Which means, at least in my opinion, no-one else has anything to say.

*Thread Reply:* +1. It's been over 48 hrs, so seems safe to go ahead

*Thread Reply:* @Maciej Obuchowski - I'm going to merge that PR, ye?

*Thread Reply:* (First it needs an approval)

*Thread Reply:* Pawel is OOO, I believe

*Thread Reply:* Aye, but I believe @Maciej Obuchowski can approve.

*Thread Reply:* :gh_approved:

*Thread Reply:* Working on the changelog now

*Thread Reply:* oops, forgot about the release vote. please +1

*Thread Reply:* it got merged 👀

*Thread Reply:* amazing feedback on a 10k line PR 😅

*Thread Reply:* maybe they have policy that feedback starts from 10k lines

*Thread Reply:* it wasn’t enough

*Thread Reply:* too big to review, LGTM

*Thread Reply:* sounds like an easy fix? we have time

*Thread Reply:* Easy fix? Yeah - not at all.

*Thread Reply:* Allright, putting the release on hold, then

*Thread Reply:* yeah - we could end up with Spark 2 dependency when using it in Spark 3 context, and that's not good

*Thread Reply:* oh, unless you mean compile time dependency on Spark 2

*Thread Reply:* then no, we need to have it, everything in lifecycle package depends on it 🙂

The idea is that it contains code common to all Spark versions, that Spark itself mostly does not change - and have spark2/3/... directories for things that specifically diverge from baseline

*Thread Reply:* I assume Paweł ment should not have Spark dependency as it should not depend on particular Spark version

*Thread Reply:* Correct me if I'm wrong, but it sounds safe to proceed. So here's the changelog PR: https://github.com/OpenLineage/OpenLineage/pull/2452

*Thread Reply:* It's safe

*Thread Reply:* @Michael Robinson let's wait with release till we solve the intermittent test failing issue https://openlineage.slack.com/archives/C065PQ4TL8K/p1708609977907359

Any idea/preference where to put very specific doc information? For example: if you're running Spark in this specific way, do this. Separate doc page seems like overkill, but I'm not sure where to put something like this where it would be discoverable

*Thread Reply:* Maybe the information could go on a new stub about "Special Cases" or something (not a great title but don't know the use case). That way the page isn't just about the exceptional case?

*Thread Reply:* Can we not trust search?

@Michael Robinson do we know when the next release will be going out? I’d sneak in some feedback this week on: • https://github.com/OpenLineage/OpenLineage/pull/2371 • and some of the circuit breaker work if it’s not too late @Paweł Leszczyński @Maciej Obuchowski 😉

*Thread Reply:* it almost happened today, so tomorrow? 🤞

*Thread Reply:* still provide feedback anyway @Willy Lulciuc!

*Thread Reply:* will do! I’ll get some feedback in tmr

Hey, i made a PR that updates the OL Airflow Provider documentation (removing outdated stuff, moving some from current OL docs, adding new info). It's not a small one, but i think it's worth the time. Any feedback is highly appreciated, let me know if something is missing, is not clear or simply wrong 🙂

@Damien Hawes there have been some random test failures after merging last 2.13 PR, for example https://app.circleci.com/pipelines/github/OpenLineage/OpenLineage/9437/workflows/4eda8d67-3bd1-4527-84fa-88c19e6774bd/jobs/179622 ```> Task :app:copyIntegrationTestFixtures

Too long with no output (exceeded 10m0s): context deadline exceeded``

hanging oncopyIntegrationTestFixtures`?

*Thread Reply:* Ah, it happened also before latest PR https://app.circleci.com/jobs/github/OpenLineage/OpenLineage/179357

*Thread Reply:* I wonder if the disk is full of that particular executor

*Thread Reply:* Its always failing at the :app:copyIntegrationTestFixtures step

*Thread Reply:* Because I'm not able to replicate this on my local

*Thread Reply:* yeah I can't replicate that too

*Thread Reply:* I'm rerunning with SSH on CI, will take a look at disk space